The Physical AI Revolution Part II: Dawn of the True Robotics Industry

Understanding the New Robotics Ecosystem

Introduction: On the Edge of Automation

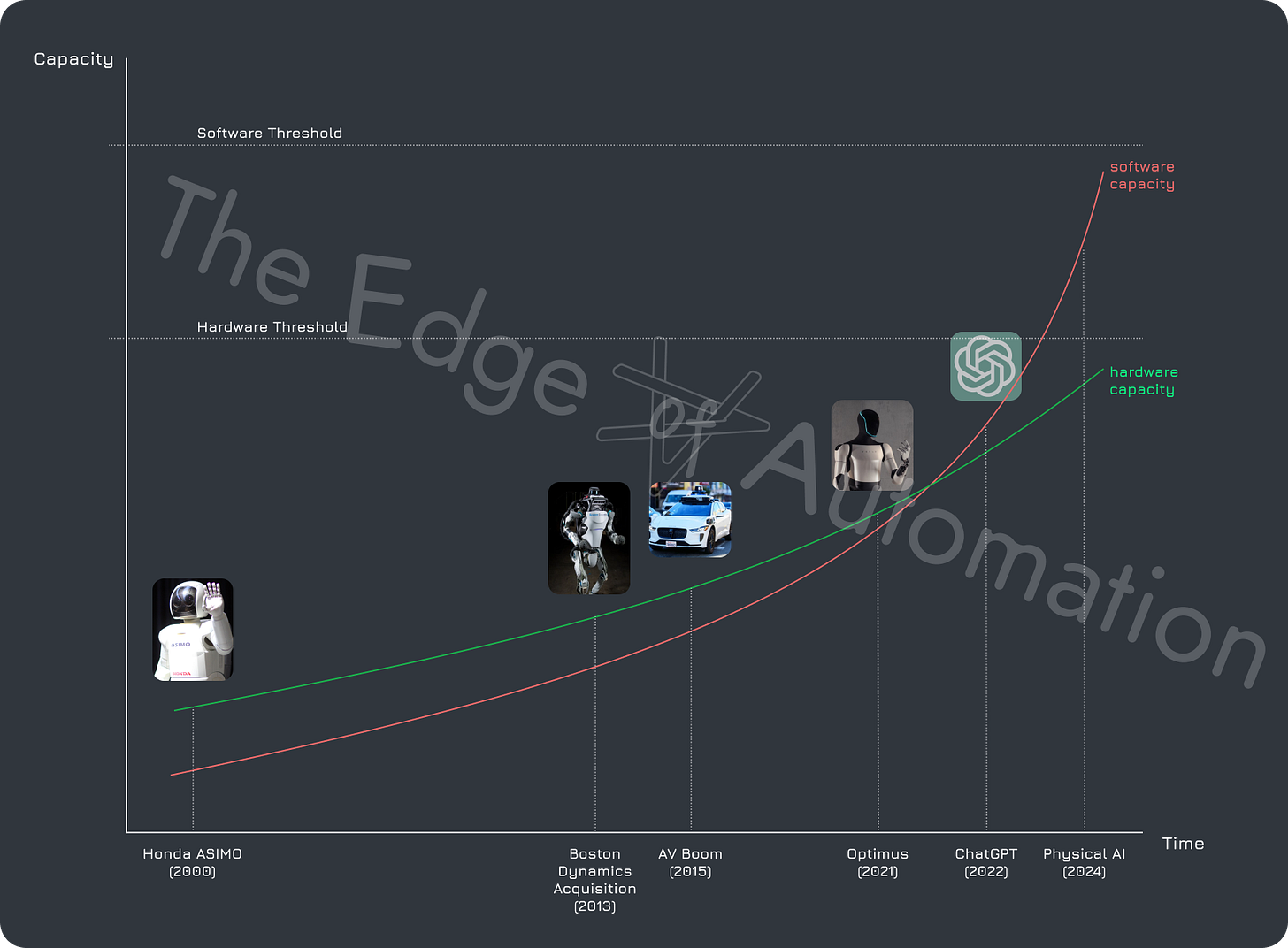

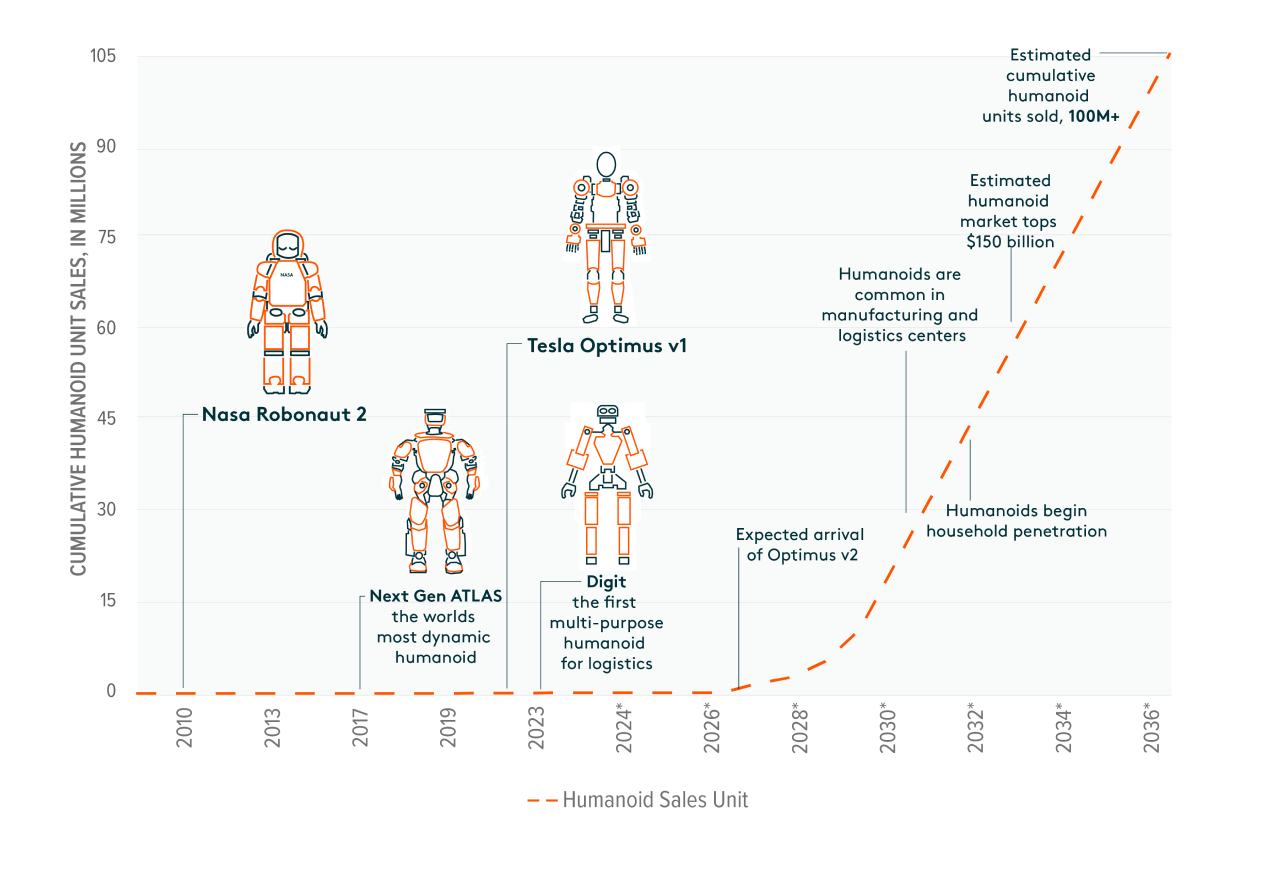

Every few weeks, I hear someone confidently proclaiming "2025 will be the year of AI-powered robots". In many ways, they're right – we're witnessing unprecedented momentum in Physical AI, massive bets on humanoid robotics, and ambitious visions of robot butlers entering our homes. But this wave of excitement is remarkably new, and its sudden emergence masks a deeper truth about where we actually stand in the history of automation.

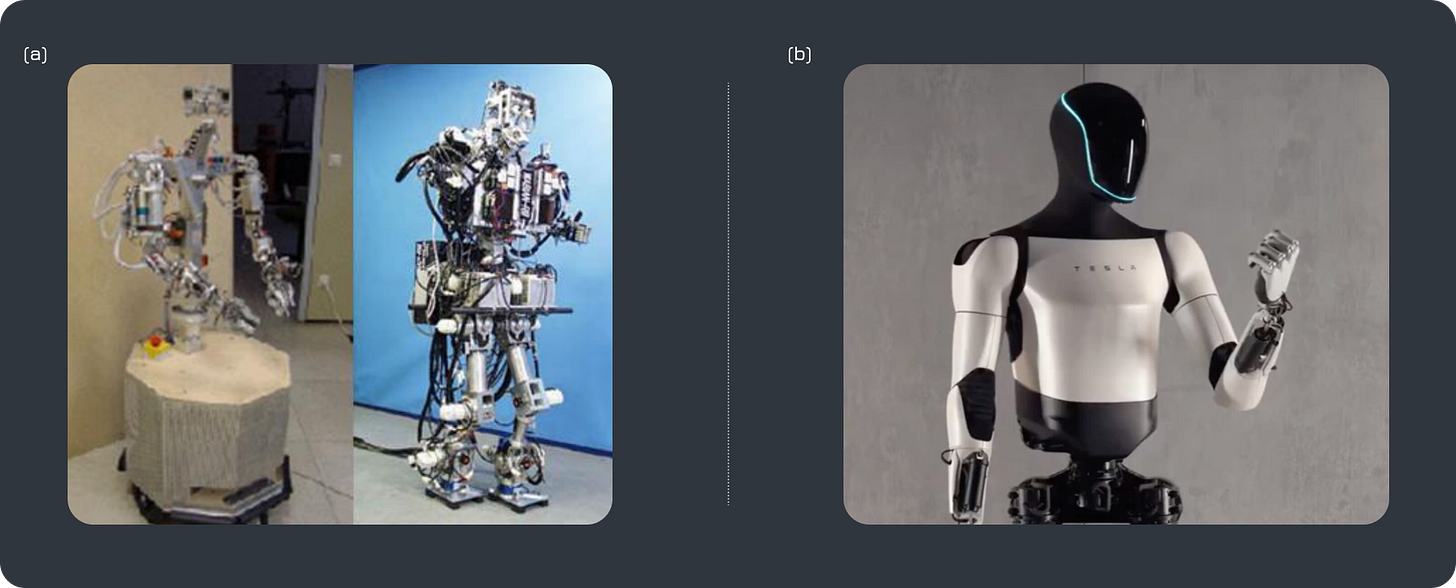

Not long ago, robotics was considered an unattainable dream, far too distant from commercialization to be taken seriously. Even the most successful examples remained confined to specially-designed factory floors, while the autonomous vehicle boom, despite consuming billions in capital, repeatedly fell short of its grand vision. More fundamentally, none of these attempts created robots that could perform one human unit of work. They were compromises and pale imitations of the capabilities people imagined when they dreamed of robots.

The challenges seemed insurmountable. Sensors and actuators were inefficient and fragile, failing to provide the dexterity required for human-level capabilities. Software systems lacked the sophistication to handle unpredictable environments. The decades-long hobbyist culture created an environment where commercial breakthroughs seemed perpetually out of reach. As recently as 2021, even optimistic projections didn't see functional humanoids arriving before 2030. The robotics industry didn't really exist; it has yet to be born.

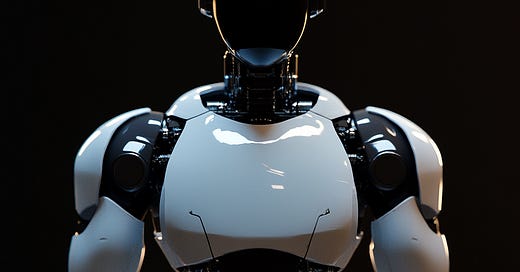

A new wave of robots is approaching fast. NVIDIA’s announcements at GTC 2025 (March) will trigger unprecedented momentum in Physical AI. Once it begins, there’s no stopping it.

But no one could have predicted how dramatically the timeline would accelerate, pulling the future of robotics forward by half a decade. We now stand at the threshold of what promises to be the first true robotics industry in human history. Technological and geopolitical factors have aligned to create a wave of automation that will alter the very fabric of civilization. Within a year or two, we'll see mass-produced, general-purpose robots capable of serving as drop-in replacements for human labor.

The transformation we're about to see is far more profound than just another technological boom. As robots emancipate humanity from manual work, they will cause a perfect storm that will dismantle the age-old constraints of human physical labor that form the very backbone of our civilization. While this seismic shift presents a once-in-a-lifetime opportunity for a few visionaries, most will find themselves on the sidelines of history, forced into this cruel race against their will. There is no middle ground – you either survive or perish.

As an engineer and entrepreneur building physical AI technologies for this emerging industry, I feel compelled to share what I know about this pivotal shift. My goal is to provide a concrete understanding of where we stand, so we can prepare for what's coming. The tunnel ahead is long and dark, but by understanding the forces at play, we might just find our way through to the other side.

Contents

This article is the second in a series of five dedicated to covering various aspects of the upcoming humanoid/robotics industry. Part 1 traced the field's evolution through five distinct phases: from early dreams of the 1970s, through cycles of disappointment with Boston Dynamics and Google, to Tesla's 2021 reboot, the impact of generative AI, and the acceleration of 2024.

Throughout this piece (Part 2), we'll examine the forces shaping the emerging robotics industry. We'll analyze the technological breakthroughs and geopolitical changes converging to create this pivotal moment, alongside the products and players poised to determine the trajectory of the ecosystem.

The article covers five key areas:

What is a Robot: Defining robotics for the age of physical AI and examining why this technological breakthrough represents a fundamental shift in human civilization.

Five Levels of Autonomy: Tracing the evolution of autonomy from Structured Manipulation of early industrial automation (Level 1), through the age of Foundational Robotics we're about to enter (Level 3), to Autonomous Systems of fully-orchestrated supply chains, cities, and nations (Level 5).

Why It Never Worked: The obstacles that held back the creation of machines with human-level capabilities.

What’s Different Now: The technological readiness and geopolitical imperatives driving momentum toward the birth of the first true robotics industry.

Anatomy of the Emerging Industry: Products, players, and competitive dynamics that will shape the industry's turbulent early years.

Future articles will explore potential evolution scenarios of the industry, implications for civilization, and connections to artificial general intelligence (AGI). But first, we must understand what truly differentiates this moment in history – why after decades of false starts and failed promises, we finally stand at the dawn of a real robotics revolution.

I. What Is a Robot and Why Do We Care?

1. Beyond Assembly Lines: Redefining the Robot

When most people think of robots, they envision industrial arms welding car frames or autonomous vacuums roaming living room floors. While these examples represent important milestones in automation, they capture only a fraction of robotics' true potential. A robot, in its fullest sense, is a machine designed to serve as a drop-in replacement for human labor: one that navigates unstructured environments, manipulates objects with human-like dexterity, and executes complex tasks with minimal oversight.

This vision of robotics gives rise to "Physical AI" – the fusion of mechanical capability with software intelligence. Just as humans integrate sensory input, cognitive processing, and physical action to interact with the world, physical AI combines advanced sensors, sophisticated algorithms, and precise actuators to perceive, reason about, and shape its surroundings. These systems transcend pre-programmed routines, developing the ability to learn, adapt, and respond to novel situations. The true promise lies in this unity of agile hardware and advanced intelligence: machines that can transform any human-centric environment into their workspace without requiring radical modifications.

This marks a fundamental departure from traditional automation, which has historically confined itself to specialized machines performing narrow tasks in controlled settings. While such automation improved efficiency in specific contexts, it never achieved the versatility needed to handle the full spectrum of human work. Physical AI bridges this gap through an essential trinity of capabilities: perception, cognition, and manipulation. By empowering machines to operate in dynamic environments without constant reconfiguration or human babysitting, it represents not an incremental upgrade but a revolutionary leap toward systems capable of matching, and potentially exceeding, human labor capacity.

2. General-Purpose Mobile Manipulation

The next wave of robotics transcends traditional automation's narrow focus on repetitive tasks. At its core lies General-Purpose Mobile Manipulation (GPMM) – the sophisticated fusion of movement and dexterity that enables machines to operate as true replacements for human labor. Unlike specialized industrial robots that perform fixed functions in meticulously-configured settings, true robots adapt seamlessly to human environments, handling diverse tasks that involve both navigation and maneuvering without hardware modifications.

A robot is a machine that can perform one human unit of work. This definition requires General-Purpose Mobile Manipulation (GPMM) – the ability to both navigate and execute diverse tasks in unstructured environments with a single, versatile physical platform.

Multipurpose drones represent another critical vector, evolving beyond simple surveillance or delivery tasks toward active environmental interaction. Advanced aerial platforms equipped with manipulation capabilities can perform infrastructure maintenance, construction support, or emergency response operations. Their ability to coordinate in swarms while handling complex physical tasks opens entirely new possibilities for automated labor, particularly in environments too dangerous or inaccessible to human workers.

General-purpose mobile manipulation platforms share a crucial characteristic: they require no more setup or modification than human workers would need. Rather than forcing environments to adapt around machines, they integrate seamlessly into existing workflows and spaces. This compatibility, combined with increasingly sophisticated Physical AI, enables them to handle the dynamic, unstructured challenges that have historically required human adaptability. The emergence of these capable platforms signals robotics' evolution from isolated demonstrations into true industry.

3. Why Humanoids Matter

Among various form factors, humanoids represent the most direct path toward widespread automation. While critics argue that the human form is suboptimal for many physical tasks, this perspective misses a crucial reality: our world is fundamentally designed around human proportions and capabilities. From doorways and staircases to tools and workstations, our environment assumes bipedal mobility and two-handed manipulation. The humanoid form is uniquely positioned for zero-friction integration into existing spaces, with contemporary models featuring increasingly robust hardware and advanced physical AI capabilities.

The economic advantages of the humanoid approach extend beyond compatibility. Developing a single versatile platform rather than numerous specialized devices reduces R&D costs and accelerates innovation through concentrated effort. This unified approach enables economies of scale in manufacturing and maintenance while creating a standardized ecosystem for software development. As capabilities improve, these benefits compound across all deployment scenarios, allowing for rapid adaptation to new tasks without physical redesign. The result is an autonomy platform with unprecedented versatility and significantly lower implementation costs compared to task-specific alternatives.

The implications stretch beyond practicality. As humanoids establish themselves in workplaces, they'll create powerful feedback loops reshaping physical infrastructure. While robots will initially adapt to human environments, spaces will gradually evolve to optimize for robots. This transformation enables a smooth transition from human labor to automated systems, setting the stage for more radical innovation: modular robots with interchangeable components, and eventually, orchestrated networks of entire supply chains, cities and nations. Humanoids represent not just an intermediate step, but the crucial bridge propelling us toward a new technological horizon.

The social and psychological dimensions of humanoid design prove equally significant. People naturally understand and relate to human-like forms, making humanoids easier to integrate into collaborative workflows. This inherent familiarity reduces training overhead and accelerates acceptance across diverse industries. While future automation may eventually evolve toward more specialized morphologies, humanoids serve as an essential intermediary that will not only enable the initial wave of widespread robotics deployment but also reshape civilization's relationship with physical labor.

4. The Imminent Surge

We stand at the threshold of the most radical transformation in human history. As robots move beyond isolated, heavily constrained applications into mainstream deployment, their impact will ripple through every aspect of our society – from manufacturing floors to supply chains, from domestic spaces to urban infrastructure. The pace of this revolution will likely accelerate exponentially as each breakthrough builds upon previous innovations. The convergence of advanced hardware, sophisticated AI models, and powerful on-board compute will yield machines that can be deployed across diverse settings without months of custom engineering.

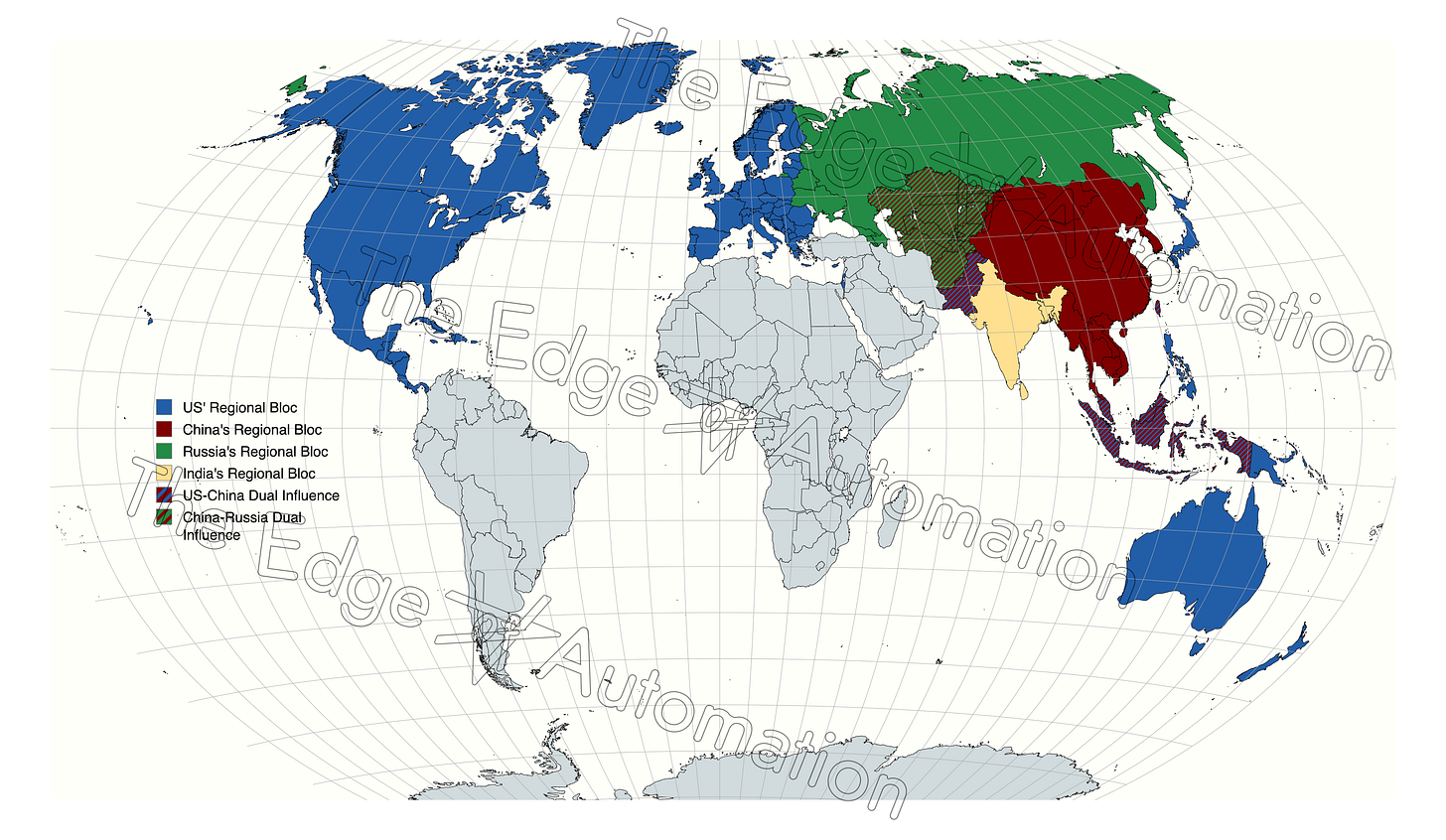

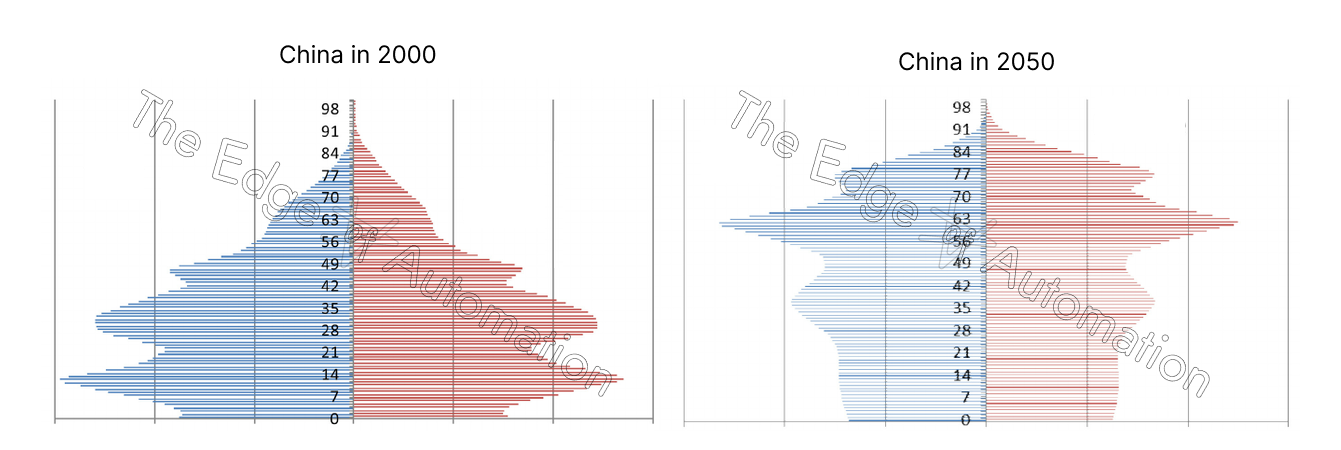

This shift represents more than technological progress. It means the dissolution of humanity's fundamental constraint: the scarcity of physical labor. Just as the internet rewrote the rules of information exchange, truly capable robots will trigger a global chain reaction that transforms our assumptions about productivity, labor value, and economic organization. Nations will race to secure the components of this emerging industry, forging new alliances and rivalries in their quest for manufacturing supremacy. The impact will be immediate and far-reaching, from 24/7 automated factories to the complete restructuring of supply chains, cities, and nations.

The stakes couldn't be higher. For individuals, this represents an unprecedented opportunity to position themselves at the forefront of a technological revolution. For institutions, failure to adapt poses an existential threat. After decades of gestation, we're witnessing the birth of the first true robotics industry, built on breakthroughs in hardware design, software intelligence, and unprecedented alignment of market incentives. The stage is set for a new era of machines that can physically transform the world around us in ways once relegated to science fiction.

To understand the magnitude of this transformation – and to separate genuine innovation from superficial hype – we must map the progression from basic automation to highly autonomous systems. In the next section, we'll explore the Five Levels of Autonomy, a framework that illuminates the path from simple task-specific machines to the versatile, intelligent robots poised to reshape our world.

II. Five Levels of Autonomy

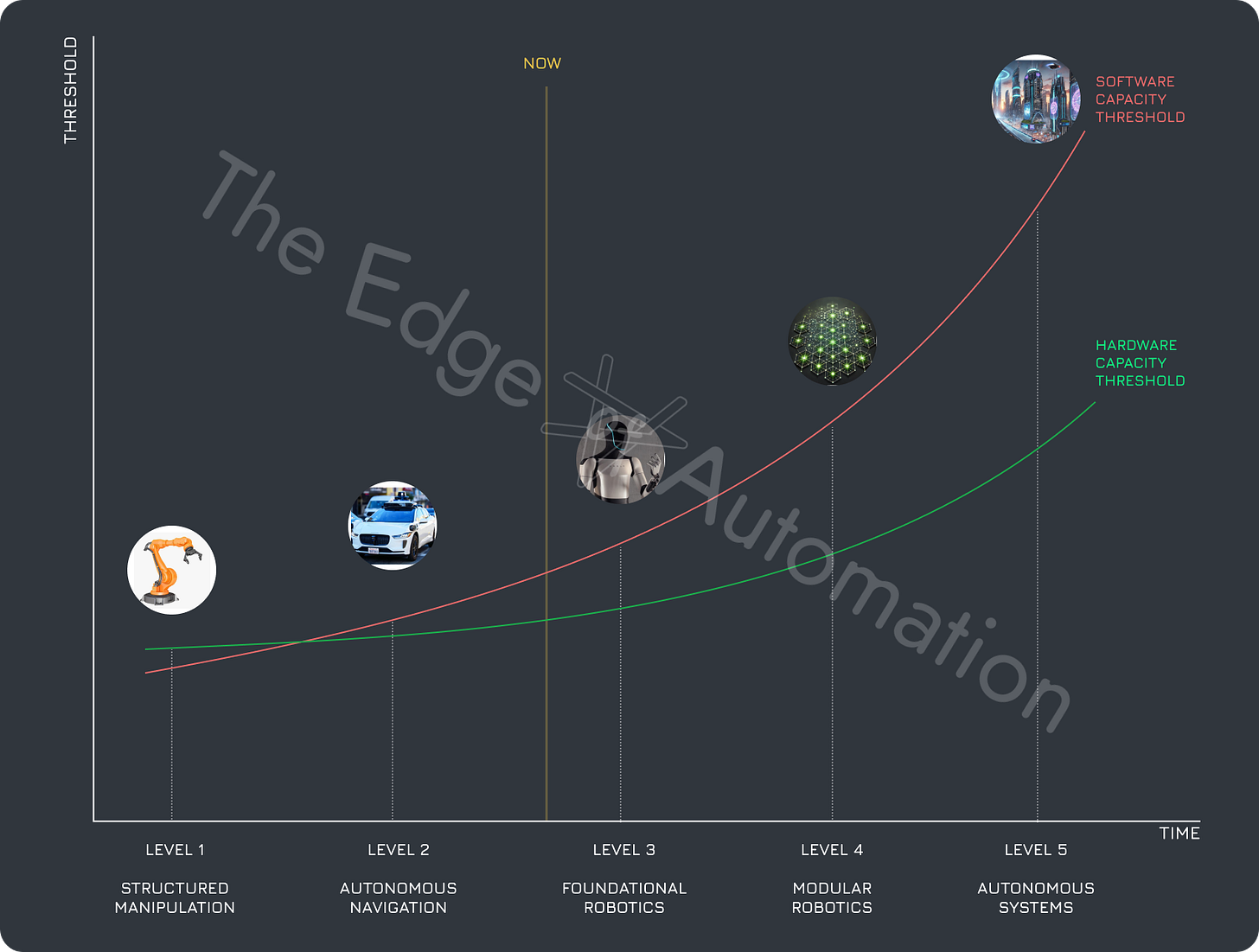

The robotics revolution isn't a sudden leap but a carefully orchestrated progression toward machines that can truly replace human labor. While "autonomy" often appears as an empty buzzword, understanding its spectrum is crucial for grasping why we stand at a unique moment in history. This framework of five distinct levels illuminates not just how far we've come, but why we're finally approaching the birth of a true robotics industry.

These five levels of autonomy are crucial to understanding the robotics revolution. We'll explore each level in greater technical depth in a future article, examining the specific hardware and software breakthroughs that enable progression through this framework.

1. LEVEL 1: Structured Manipulation

The foundation of industrial automation lies in machines that excel at repetitive tasks within tightly controlled environments. Industrial arms can weld, paint, and assemble with remarkable precision, but only because their world is deliberately engineered to eliminate variability. The entire production line must be built around them, with safety fences, conveyor systems, and precise product positioning ensuring they face only predetermined scenarios.

Video: Industrial Arms (Level 1 Autonomy)

While these machines revolutionized manufacturing, their "autonomy" is superficial. They follow rigid scripts that require extensive reprogramming for even minor product variations, and their focus on mechanical precision over adaptive intelligence results in expensive systems that can't handle real-world complexity. This represents everything the coming robotics revolution must overcome: instead of forcing environments to adapt to machines, we need machines that can adapt to human environments. Level 1 Autonomy may have transformed some factories, but it falls far short of creating true replacements for human labor.

2. LEVEL 2: Autonomous Navigation

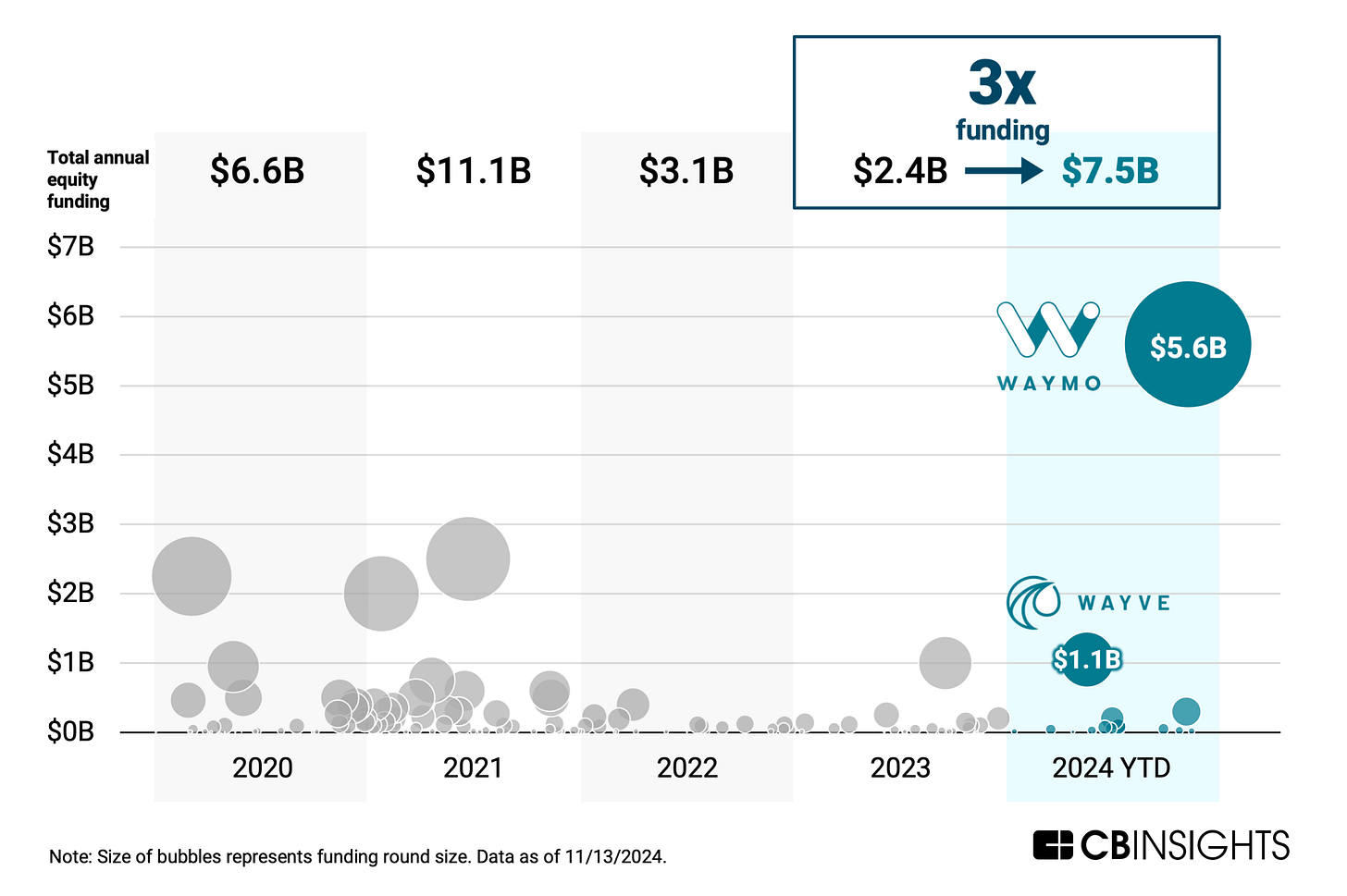

The second level introduces genuine mobility through autonomous vacuums, delivery drones, and self-driving vehicles. These systems use cameras, LIDAR, and GPS to map their environment and navigate dynamic spaces. This leads to a massive complexity increase: processing real-time data and reacting to unexpected obstacles rather than performing fixed actions in controlled settings. The autonomous vehicle industry's repeated setbacks highlight this challenge – even "simple" navigation in unstructured environments demands breakthroughs in perception and decision-making.

Video: Autonomous Vehicle Example by Waymo (Level 2 Autonomy)

Yet Level 2 systems remain severely limited in manipulation. They can transport payloads between fixed points but lack the dexterity needed for manufacturing, maintenance, or household tasks. This fundamental gap between mobility and manipulation explains why Level 2 Autonomy, despite its sophistication, falls short of true robotics. Creating machines that can replace human labor requires unifying these capabilities into single, adaptable systems – a challenge that defines the next level of autonomy and the dawn of real robotics.

3. LEVEL 3: Foundational Robotics

Level 3 Autonomy (Foundational Robotics, or General-Purpose Robotics) marks the beginning of true robotics. This is where navigation and manipulation finally merge, creating machines that can serve as genuine replacements for human labor. These robots combine the mobility to move through spaces with the dexterity to handle diverse objects and tasks, all while adapting to environmental changes with minimal oversight. Unlike specialized systems of previous generations, these general-purpose robots can tackle varied scenarios without hardware modifications, relying instead on adaptable software and versatile physical designs.

General-Purpose Mobile Manipulation (GPMM) represents the technological threshold that sets Level 3 systems apart from their predecessors – the integration point where machines transition from specialized automation to foundational robotics capable of generalized work.

Video: Tesla Optimus Gen 2 (Level 3 Autonomy)

The technological requirements for Level 3 are immense. Robots must process multiple sensor streams while making split-second decisions about positioning, gripping, and task execution. Physical AI becomes essential, as pre-programmed routines can't handle the complexity of real-world interactions. These systems demand sophisticated models that combine perception, reasoning, and learning into unified frameworks to drive next-generation actuators and sensors for human-like dexterity. The computational requirements involve high-throughput edge processing capabilities that can handle multimodal inputs while maintaining real-time responsiveness. Recent breakthroughs in adaptive control systems and sim-to-real transfer learning have accelerated development, enabling robots to generalize from limited training data to novel situations.

We are entering this phase now. The surge in humanoid robotics and multi-purpose automation signals an unprecedented harmony: AI models sophisticated enough to understand physical interactions, hardware capable of human-like movement, and focused product strategies backed by desperate capital. We're about to see machines that can adapt to human environments rather than requiring environments to adapt to them – a fundamental shift leading to the birth of the true robotics industry. This transformation will reshape every sector of society that previously required human physical labor, from manufacturing and logistics to households and services. Organizations pioneering these systems are positioned to not only establish new platform monopolies but also to reshape the power dynamics of the entire human world.

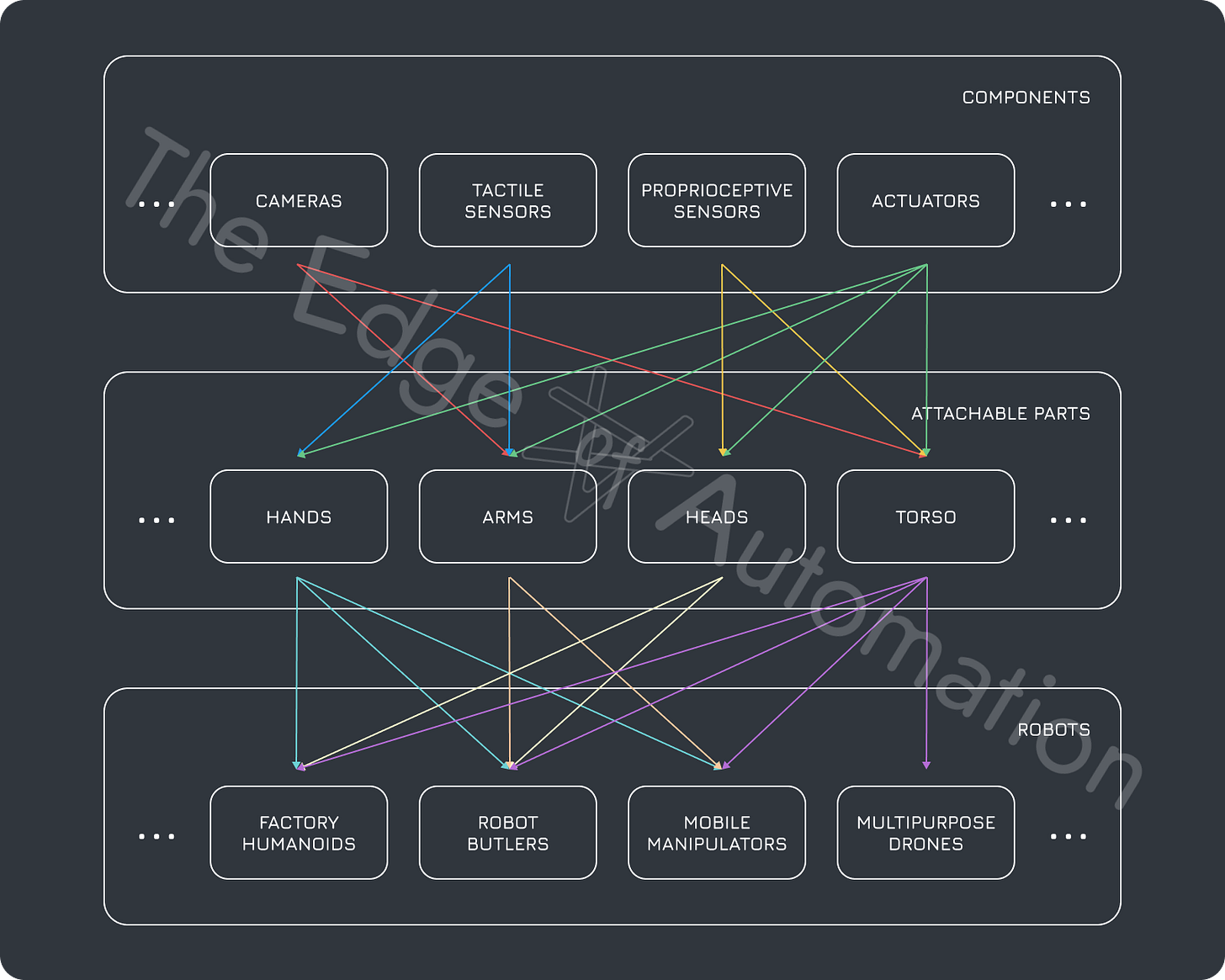

4. LEVEL 4: Modular Robotics

Where Level 3 establishes the foundation, Level 4 creates an ecosystem of plug-and-play components. The focus shifts from vertically-integrated robots to standardized platforms where hardware and software can be mixed and matched like building blocks. Sensors, actuators, and entire limbs become interchangeable modules, while unified architectures enable rapid deployment of new capabilities across diverse form factors.

This modularity transforms the economics of robotics. Instead of each company building complete systems from scratch, specialists can focus on developing best-in-class components that plug into standardized interfaces. The result is accelerated innovation, lower costs, and the emergence of vibrant developer ecosystems – similar to how personal computing exploded once hardware and software were decoupled. As robotics moves from integrated systems to modular platforms, we'll see an explosion of new applications and capabilities that were once thought impossible to achieve.

5. LEVEL 5: Autonomous Systems

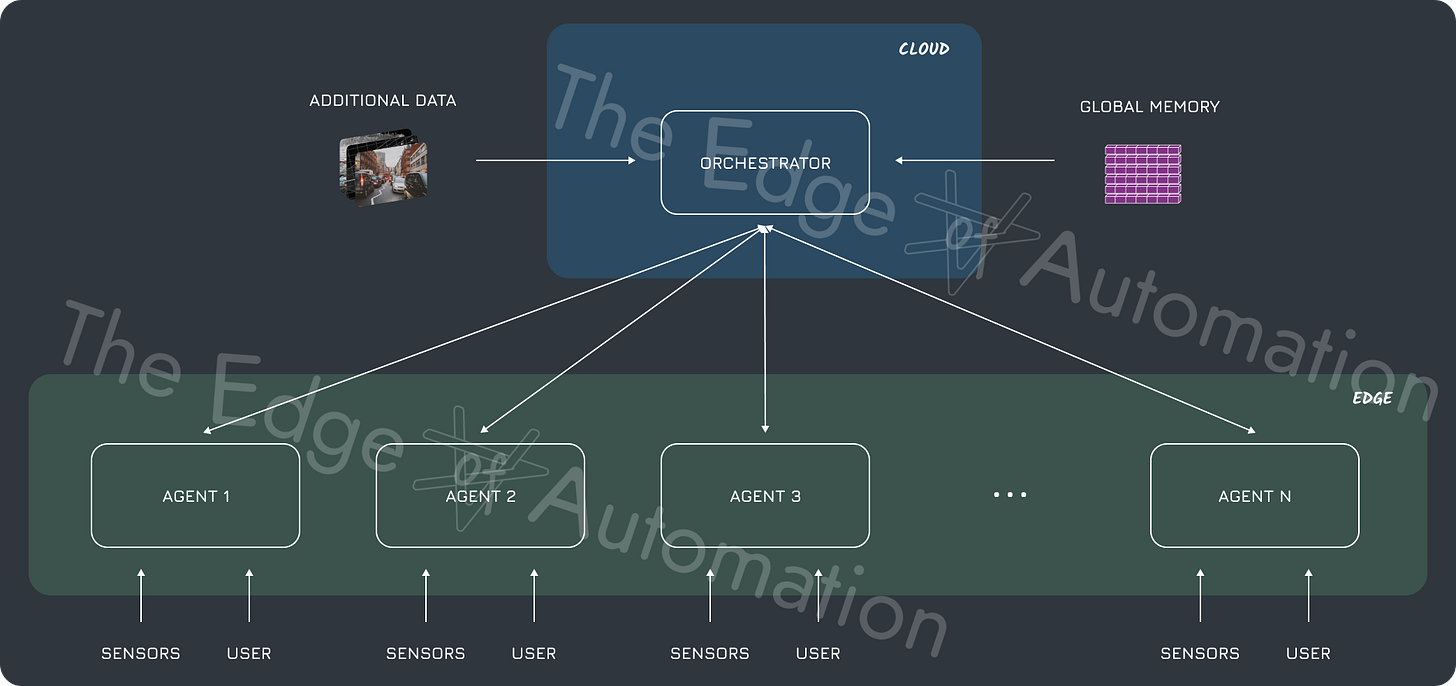

The final level transcends individual machines to orchestrate entire networks of automated infrastructure. Factories, supply chains, and even cities become integrated systems where physical AI coordinates complex operations without human oversight. Raw materials flow through automated production lines, autonomous vehicles manage logistics networks, and robot fleets maintain urban services in a seamless dance of coordinated automation.

This represents civilization's pivot from human-supervised machines to machine-supervised ecosystems. The technological requirements are staggering: advanced simulation capabilities, orchestration AI handling billions of components in real-time, and hardware robust enough for continuous operation. Yet this is the ultimate destination where physical AI doesn't just replace human labor but reimagines how entire societies function.

The progression through these levels reveals why previous attempts at a robotics revolution fell short. While Levels 1 and 2 brought valuable but limited automation, the leap to Level 3 demanded simultaneous breakthroughs in hardware, software, and system design that remained out of reach. Now, as these barriers finally fall, we're witnessing the birth of an industry that will fundamentally transform human civilization.
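One way to make the framework concrete is to read each level as the set of capabilities it adds over the one before. The sketch below is only an illustrative encoding of the descriptions above: the `Capability` flags and the `classify` function are hypothetical names introduced here, not part of the framework itself.

```python
from enum import Flag, IntEnum, auto


class Capability(Flag):
    """Illustrative capability flags (names are assumptions for this sketch)."""
    NONE = 0                 # scripted motion in an engineered workspace
    NAVIGATION = auto()      # moves through unstructured space on its own
    MANIPULATION = auto()    # handles diverse objects without retooling
    MODULAR = auto()         # built from interchangeable, standardized parts
    ORCHESTRATED = auto()    # coordinated inside a larger automated network


class AutonomyLevel(IntEnum):
    STRUCTURED_MANIPULATION = 1
    AUTONOMOUS_NAVIGATION = 2
    FOUNDATIONAL_ROBOTICS = 3
    MODULAR_ROBOTICS = 4
    AUTONOMOUS_SYSTEMS = 5


def classify(caps: Capability) -> AutonomyLevel:
    """Return the highest level whose requirements the capability set meets."""
    gpmm = Capability.NAVIGATION | Capability.MANIPULATION
    if (caps & gpmm) != gpmm:
        # Without both halves of GPMM, a system stays below Level 3.
        if Capability.NAVIGATION in caps:
            return AutonomyLevel.AUTONOMOUS_NAVIGATION
        return AutonomyLevel.STRUCTURED_MANIPULATION
    if Capability.MODULAR not in caps:
        return AutonomyLevel.FOUNDATIONAL_ROBOTICS
    if Capability.ORCHESTRATED not in caps:
        return AutonomyLevel.MODULAR_ROBOTICS
    return AutonomyLevel.AUTONOMOUS_SYSTEMS
```

Under this encoding, a self-driving car (`Capability.NAVIGATION`) lands at Level 2, while a platform that has both navigation and general manipulation crosses into Level 3, mirroring the GPMM threshold described earlier.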

A future article will provide a comprehensive technical analysis of these five levels, including detailed examinations of the hardware configurations, software architectures, and AI models that enable each stage of autonomy.

III. Why It Never Worked: The Perfect Storm of Barriers

For decades, robotics remained trapped in a paradox: billions in investment and countless breakthroughs never translated into machines capable of matching human capabilities. While industrial arms conquered factory floors and autonomous vehicles made headlines, true robotics – the seamless fusion of mobility, manipulation, and intelligence – stayed perpetually out of reach.

The challenges were comprehensive and mutually reinforcing. Hardware remained either too fragile or prohibitively expensive for real-world deployment. Software proved inadequate for the complexity of physical interaction. More fundamentally, the field never developed the cohesive culture needed to attract elite talent and drive commercial innovation. Instead of maturing into an industry, robotics limped along as a collection of isolated research projects and limited demonstrations. Understanding these obstacles is crucial to grasping why we stand at such a pivotal moment today, as these very barriers finally begin to fall.

1. The Curse of Mobile Manipulation

General-Purpose Mobile Manipulation (GPMM) represents the core challenge that kept robotics from achieving human-level capability. Combining autonomous movement with dexterous manipulation opens up exponential complexity. The instant you place an arm on a moving base – or give it legs – you create a system that must simultaneously maintain balance, navigate space, and manipulate objects with precision. This multifaceted challenge requires orchestrating dozens of joints, sensors, and control systems in millisecond-level coordination while accounting for environmental variability that humans instinctively handle. The computational demands alone would have overwhelmed the processors available even five years ago, requiring breakthroughs in both hardware and software architectures.

This complexity manifests in three critical ways. First, robots must build real-time environmental understanding from noisy sensor data, interpreting not just object locations but their "affordances" – whether a handle can be grasped or a surface can support weight. Second, perception, planning, and motor control must work in perfect harmony. Finally, they must adapt instantly to unexpected changes, from shifting object positions to varying surface textures. Each challenge compounds the others, causing failure cascades where a minor perceptual error leads to catastrophic planning mistakes, or where slight motor miscalibration renders sophisticated environmental models useless. The interdependency of these systems created a technological bottleneck that traditional siloed approaches couldn't overcome.

Video: Humanoid Falls during 2015 DARPA Robotics Challenge

Early attempts highlighted these challenges. Honda's ASIMO could walk and gesture but struggled with novel tasks. The 2015 DARPA Robotics Challenge famously ended with humanoids failing at basic tasks like opening doors or recovering from falls. Even advanced prototypes remained brittle; the slightest deviation from expected conditions would cause system-wide failure. These high-profile disappointments revealed how naively optimistic early robotics timelines had been, with engineers underestimating the gap between controlled demonstrations and practical deployment. The sheer engineering complexity drove many promising startups into bankruptcy as they exhausted funding before achieving commercially viable capabilities.

The fundamental barrier was high-dimensional uncertainty. A mobile manipulator must track its location, monitor dynamic objects, plan complex movements, and maintain stability, all while processing noisy sensor data in real-time. Until recently, no integrated platform could handle this combinatorial explosion of complexity, leaving truly capable general-purpose robotics perpetually out of reach. This explains why factory floors remained rigidly structured for decades: simplifying environments was the only viable approach when machines couldn't adapt to natural variation. As a result, entire industries remained dependent on human dexterity despite massive investments in automation, leading to a technological ceiling that appeared insurmountable until the convergence of advanced AI and hardware finally shattered these longstanding barriers.

2. Hardware's Glass Ceiling: Sensors and Actuators

Hardware lies at the heart of robotics' long-standing limitations. Even the most sophisticated prototypes were hamstrung by fragile sensors, inefficient actuators, and power systems that couldn't sustain extended operation. These hardware constraints weren't just technical hurdles – they formed an impenetrable ceiling that relegated robots to sterile, structured workspaces and tightly orchestrated production lines, preventing the emergence of the versatile, adaptable machines needed for real-world applications.

2.1. Sensors

For robots to match human capabilities, they need sensor performance rivaling our own biological systems. While recent years have seen incremental improvements in machine vision and tactile sensing, the majority of sensor technology remained too limited, costly, or fragile for large-scale deployment.

2.1.1. Vision

Video: Why Computer Vision is a Hard Problem for AI

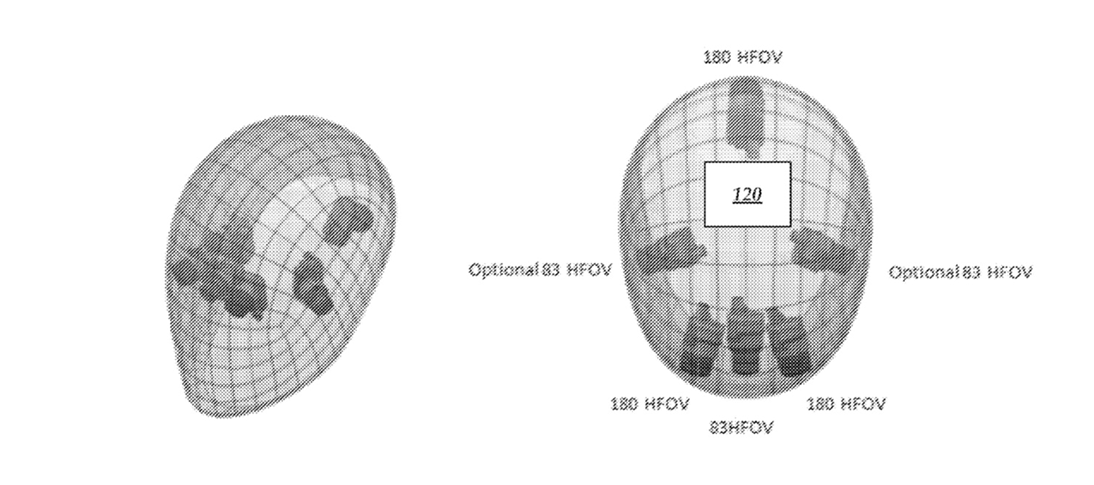

Vision systems, the cornerstone of perception, faced three critical limitations. First, camera hardware struggled with resolution, field of view, and latency. While humans seamlessly integrate peripheral and foveal vision, robots required multiple cameras for comparable coverage – driving up cost, power consumption, and processing overhead. Second, depth sensing remained a persistent challenge. Early 3D sensors were bulky and unreliable, forcing engineers to cobble together combinations of stereo cameras, structured light sensors, and LIDARs – each with significant trade-offs. Finally, real-world conditions like rain, snow, and dust significantly impacted the quality of visual data, affecting robots’ ability to interpret their surroundings.
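To give a feel for one of those trade-offs, here is a minimal sketch of the textbook pinhole model for a rectified stereo pair, where depth is `focal_length * baseline / disparity`. It shows why stereo depth degrades with range: for a fixed matching error in pixels, the depth error grows with the square of the distance. The function names and the example numbers in the note below are assumptions chosen for illustration, not figures from any particular sensor.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by an idealized rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float,
                depth_m: float, disparity_error_px: float) -> float:
    """First-order depth uncertainty (m) for a given disparity error.

    Differentiating Z = f * B / d gives |dZ| ~= Z**2 / (f * B) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px
```

With a hypothetical 700-pixel focal length, a 10 cm baseline, and a quarter-pixel matching error, `depth_error` at 5 m is 25 times larger than at 1 m. Widening the baseline helps, but it makes the sensor bulkier, which is the kind of compromise the paragraph above describes.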

2.1.2. Touch and Tactile

Video: Tactile Sensing Lecture

The gap between human and robot touch sensing was even more stark. Human hands contain thousands of mechanoreceptors providing continuous feedback on texture, pressure, and temperature. Replicating this sensory network proved extraordinarily difficult. High-density tactile arrays remained prohibitively expensive and fragile, while attempts at "robot skin" failed under normal wear and environmental stresses. Even when partial solutions emerged, they generated torrents of data that overwhelmed early processing systems. This absence of reliable tactile feedback forced robots to rely almost exclusively on vision and crude force sensing, making fine manipulation virtually impossible.

2.1.3. Proprioception

Video: Motion Capture vs Proprioception

Proprioception – the internal sense of body configuration and movement – represents another crucial capability that early robotics couldn't replicate. While humans possess specialized receptors throughout our musculoskeletal system, robots relied on basic accelerometers, gyros, and joint encoders prone to noise and drift. This limited sensor suite made precise balance and dynamic manipulation difficult, especially when robots needed to adjust their center of gravity while handling objects. High-fidelity proprioceptive systems existed but remained too expensive and power-hungry for practical deployment, forcing most designs to fall back on heavily scripted movements.
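The noise-and-drift problem can be illustrated with a complementary filter, a common lightweight way to fuse a gyro (smooth but drifting) with an accelerometer (drift-free but noisy). This is a minimal single-axis sketch under stated assumptions: the axis convention in `accel_tilt`, the blend factor `alpha`, and the sample layout are illustrative choices, not a description of any specific robot's estimator.

```python
import math


def accel_tilt(ax: float, az: float) -> float:
    """Tilt angle (rad) about one axis from the measured gravity direction."""
    return math.atan2(ax, az)


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> list:
    """Fuse gyro rate and accelerometer tilt into one angle estimate.

    samples: iterable of (gyro_rate_rad_s, ax, az) tuples.
    alpha:   weight on the integrated gyro path; (1 - alpha) goes to the
             accelerometer, which slowly pulls the estimate back from drift.
    """
    angle = None
    estimates = []
    for gyro_rate, ax, az in samples:
        acc_angle = accel_tilt(ax, az)
        if angle is None:
            angle = acc_angle  # initialize from the drift-free sensor
        else:
            angle = alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * acc_angle
        estimates.append(angle)
    return estimates
```

For a stationary sensor with a constant gyro bias `b`, pure integration drifts by `b * dt` every step without limit, whereas this estimate settles near `alpha * b * dt / (1 - alpha)`. The residual error is bounded but not zero, which hints at why low-cost sensor suites struggled with precise balance.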

2.1.4. Force and Torque

Force and torque sensing provides critical feedback for physical interaction. Multi-axis force/torque (F/T) sensors were sensitive, fragile, and required constant recalibration. Their bandwidth limitations often forced compromises in joint design, while their high maintenance burden restricted them to specialized applications. This left most platforms with minimal force awareness – leading to clumsy manipulation, frequent hardware damage, and safety concerns for human-robot collaboration.

Video: Why You Need Torque Sensors

Collectively, these sensor limitations formed a formidable barrier to achieving human-level autonomy. Without reliable vision, touch, proprioception, and force sensing working in concert, robots remained confined to highly structured environments where their limited perception could be compensated for. Only recently have advances in sensor technology, coupled with breakthroughs in processing capabilities, begun to crack this longstanding ceiling.

2.2. Actuators

Actuators are the muscles of a robot that convert energy into motion. Their limitations have arguably been the most rigid ceiling in robotics’ long pursuit of human-level dexterity. While sensors shape how robots perceive the world, actuators define their ability to act within it – and for decades, no actuator technology could match the power density, efficiency, and adaptability of human muscle tissue.

2.2.1. Power Density and Efficiency

The fundamental challenge was achieving strength without compromising mobility. Early humanoids faced an impossible dilemma: powerful but heavy hydraulic systems that drained energy reserves, or lightweight electric motors that lacked the torque for dynamic movement. Boston Dynamics' Atlas demonstrated the trade-offs perfectly – its hydraulic actuators enabled impressive demonstrations but required a massive power pack that limited practical deployment.

Video: Hydraulic vs Electric Actuation in Robotics

The efficiency problem compounded these limitations. Every watt wasted as heat meant larger cooling systems and heavier battery packs, creating a vicious cycle that kept robots tethered to external power sources or limited to brief demonstrations. This energy constraint alone made continuous operation impossible, forcing teams to compromise between power and endurance in ways that human workers never face.
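A back-of-the-envelope sketch shows how quickly actuator efficiency eats into runtime. Every number below (battery capacity, mechanical load, efficiencies) is a hypothetical placeholder, not a measurement from any real robot.

```python
def electrical_power_w(mechanical_power_w, actuator_efficiency):
    """Electrical power the actuators must draw to deliver the given mechanical power."""
    if not 0.0 < actuator_efficiency <= 1.0:
        raise ValueError("actuator_efficiency must be in (0, 1]")
    return mechanical_power_w / actuator_efficiency


def waste_heat_w(mechanical_power_w, actuator_efficiency):
    """Power lost as heat, which the cooling system has to remove."""
    return electrical_power_w(mechanical_power_w, actuator_efficiency) - mechanical_power_w


def runtime_hours(battery_wh, mechanical_power_w, actuator_efficiency):
    """Runtime on a fixed battery, ignoring compute and other loads for simplicity."""
    return battery_wh / electrical_power_w(mechanical_power_w, actuator_efficiency)


# Hypothetical robot: 2 kWh battery, 300 W of average mechanical output.
BATTERY_WH = 2000.0
MECHANICAL_W = 300.0

for label, efficiency in [("inefficient drive", 0.30), ("efficient drive", 0.80)]:
    hours = runtime_hours(BATTERY_WH, MECHANICAL_W, efficiency)
    heat = waste_heat_w(MECHANICAL_W, efficiency)
    print(f"{label}: {hours:.2f} h runtime, {heat:.0f} W of waste heat")
```

Under these assumptions the inefficient drive lasts about 2 hours and sheds 700 W as heat, while the efficient one lasts over 5 hours and sheds 75 W. The sketch omits the compounding effect described above: extra cooling and battery mass raise the mechanical load itself, which makes the real gap wider.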

2.2.2. Compliance and Safety

Even with sufficient power, rigid actuators proved dangerously unsuited for human environments. The slightest miscalculation in force control could damage hardware or injure nearby workers. While humans naturally adapt their muscle tension to different tasks, robots needed complex mechanical and software solutions to achieve similar compliance.

Traditional actuators – whether electric or hydraulic – lacked inherent "backdrivability", the ability to yield when encountering resistance. Series elastic elements offered a partial solution but introduced their own challenges in control precision and durability. Without reliable compliance, robots either remained confined to safety cages or were forced to move so cautiously that their practical value evaporated.

2.2.3. Durability and Cost Effectiveness

The final barrier was economic: actuators robust enough for continuous operation remained prohibitively expensive for mass deployment. Soft tissues and high-precision components degraded quickly under real-world stress, while more durable alternatives sacrificed the fine control needed for complex manipulation.

Video: The Power of Soft Robotics

This cost barrier created a paralyzing feedback loop: low production volumes kept prices high, which limited adoption, which in turn prevented the economies of scale needed to make better actuators affordable. Until this cycle could be broken, the dream of ubiquitous robotics remained economically unfeasible, regardless of other technological advances.

The actuator problem proved as fundamental as the sensor challenge: even perfect perception remains useless if a robot can't move fluidly, handle objects securely, or operate for more than a few minutes without failing. Whether from insufficient power density, lack of compliance, or prohibitive costs, these hardware limitations were enough to stall early robotics efforts before they could even tackle advanced software challenges.

3. Software Bottleneck: From AI to Infrastructure

Even if perfect hardware had appeared overnight, robotics would have remained trapped by software limitations. The field struggled across three critical domains: adaptive intelligence (Physical AI), deployment platforms (infrastructure), and professional-grade tooling (ecosystem). These software gaps made it difficult for robots to effectively perceive and interact with the world.

3.1. Physical AI

Physical AI represents the intelligence layer that enables robots to perceive, reason about, and operate in unstructured real-world settings. Traditional robotics faced fundamental constraints in sensing, planning, and control that limited adaptation to complex, dynamic environments.

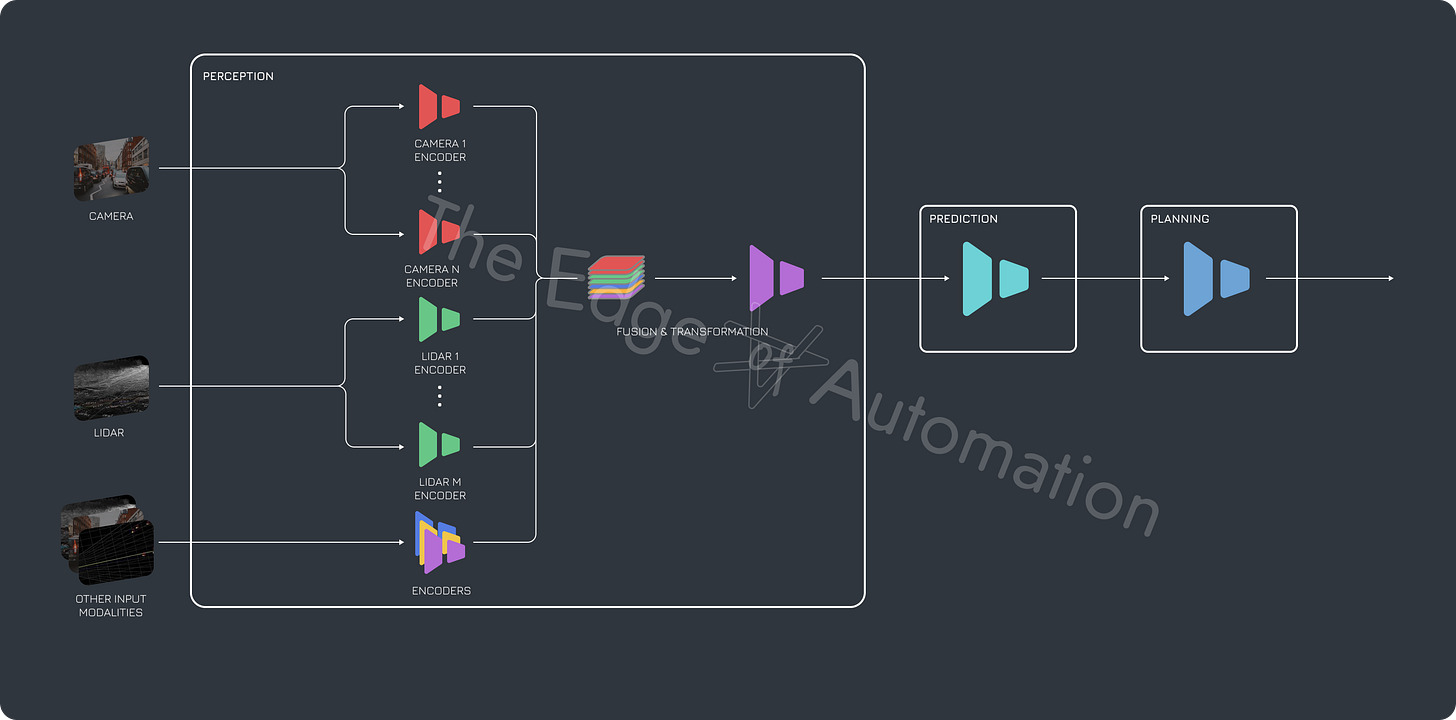

3.1.1. AI Models

The AI architecture powering most robots reflected a critical misunderstanding of what true autonomy requires. Instead of unified intelligence, teams cobbled together constellations of small, specialized models, each trained for an isolated task or specific sensor type. A typical autonomous vehicle might deploy separate neural networks meticulously tuned for front, side, and rear cameras, stitching their outputs together with brittle rule-based logic. This disjointed design created sharp discontinuities in robot behavior during critical moments. A vehicle might recognize pedestrians from the front camera but fail when they appeared in peripheral views, making it impossible to handle novel scenarios or generalize learning across interconnected real-world situations.

This architectural fragmentation spawned a maintenance nightmare that devoured engineering resources and created paralyzing technical debt. Each specialized model required its own training pipeline, evaluation metrics, and deployment infrastructure. Dependencies between systems grew exponentially complex, with updates to one component frequently causing cascading failures across seemingly unrelated modules. Teams found themselves trapped in endless cycles of retraining and recalibration, debugging interactions between models rather than advancing core capabilities. The result was robots that fell apart when facing the fluid challenges of cluttered human environments – precisely the scenarios defining useful real-world deployment.

The problem penetrated deeper than suboptimal architectural choices. While the broader AI community moved toward large foundation models capable of handling multiple modalities, robotics remained stuck in an outdated paradigm of narrow, task-specific networks. This isolation from mainstream AI progress proved costly as innovative architectures revolutionized fields from computer vision to natural language processing, resulting in a widening capability gap that left robotics years behind the cutting edge. While robots required the most sophisticated reasoning to navigate physical reality, they were paradoxically equipped with the least advanced AI models available, virtually guaranteeing their inability to achieve human-level adaptability.

3.1.2. Data

The data problem proved equally crippling. Unlike web companies that could harvest millions of user interactions with minimal cost, robotics teams faced formidable barriers to collecting the diverse, high-quality data needed to train robust AI models. Physical data gathering demanded operating expensive, fragile hardware in real environments – a slow and resource-intensive process that yielded relatively small datasets. This limitation was exacerbated by the industry's fragmentation: without standardized sensor configurations or shared data formats, even the limited data collected remained siloed within individual research groups or companies, preventing the pooling that might have accelerated progress.

Video: Can Robotics Overcome Its Data Scarcity Problem?

This data scarcity hit hardest when attempting sophisticated learning approaches like reinforcement learning, which might require billions of interactions to master complex tasks. The absence of high-fidelity simulation frameworks left teams unable to supplement scarce real-world data with synthetic examples. With no viable path to acquire sufficient training data, most robots defaulted to hand-crafted rules or simple models trained on woefully inadequate datasets. Data poverty condemned early robotics systems to rigid, preprogrammed behaviors that collapsed when confronting the complexity of real-world environments, creating a seemingly insurmountable barrier to developing machines with genuine adaptability.

3.1.3. Deployment

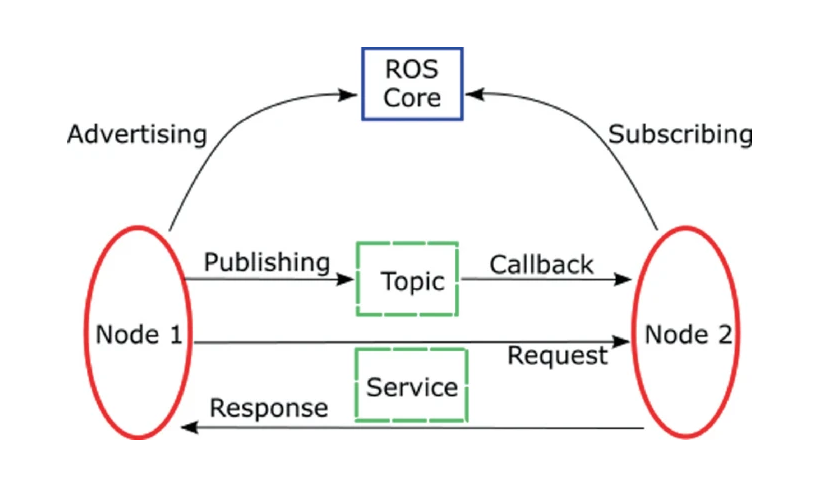

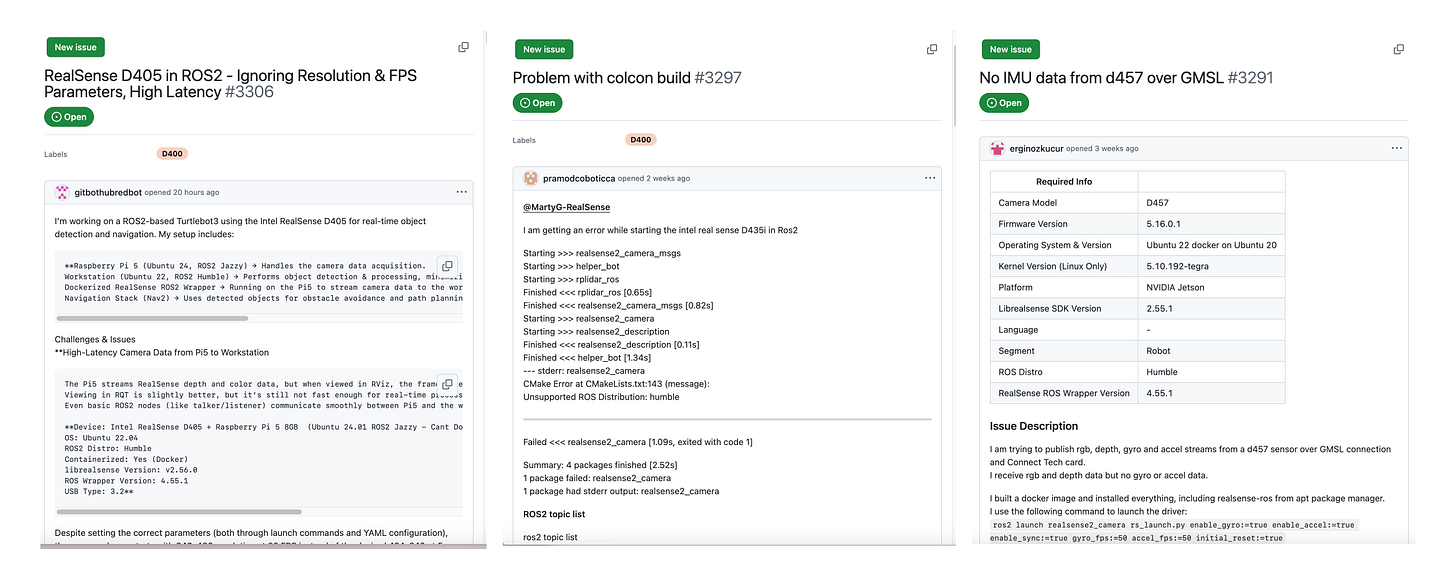

Even when teams managed to develop working AI models, they faced a final hurdle: no mature platform existed for shipping complex intelligence onto physical robots. ROS (Robot Operating System) promised a universal framework but exposed critical flaws in practice. Its TCP-based interprocess communication architecture induced latency spikes and memory thrashing, while attempts to optimize through "nodelets" introduced new layers of bugs and complexity.

The containerization wave that revolutionized web services actually compounded these problems in robotics. Hardware drivers frequently broke inside containers, forcing teams to adopt brittle workarounds. Container images ballooned to tens of gigabytes as they accumulated NVIDIA drivers, CUDA libraries, and other dependencies. Without a robust, standardized platform, serious robotics companies either forked ROS into proprietary variants or abandoned it entirely – further fragmenting the ecosystem and preventing the emergence of shared best practices.

3.2. Infrastructure

Beyond AI models and algorithms, robotics demanded robust infrastructure to support deployment at scale – yet this foundation remained conspicuously absent. Three critical gaps prevented even promising prototypes from reaching commercial viability: the inability to port software across platforms, insufficient compute power for real-time operation, and unreliable hardware interfaces that made integration nearly impossible.

3.2.1. Software Portability

The dream of modular, reusable robotics software collided with harsh reality: even simple components proved nightmarishly difficult to port between platforms. Each robot required a unique combination of sensor configurations, hardware drivers, and control systems. What worked perfectly in one setting would fail catastrophically in another, forcing teams to rebuild basic functionality from scratch with every new deployment.

Video: Installing Software and Preparing the Robot (Highlights Challenges in Software Portability)

Open-source efforts like ROS promised standardization but fell short in practice. Their packages, designed primarily for research and hobbyist use, buckled under commercial demands. Some companies responded by building proprietary frameworks, but this only accelerated fragmentation. Dependency management became a nightmare, and any attempt to upgrade one library would trigger cascading failures across the entire software stack. The field became trapped in an endless cycle of rewrites and incompatibilities that made scaling impossible.

3.2.2. Compute

Video: Efficient Computing for Low-Energy Robotics

Real-time processing of sensor data and AI models demanded massive computational power, yet mobile robots couldn't afford the size, weight, or energy costs of traditional high-performance computing. Standard GPUs drained batteries in minutes while generating enough heat to require bulky cooling systems. The absence of powerful robotics processors meant teams had to choose between underpowered capabilities or power-hungry server components never designed for mobile use. This compute bottleneck effectively capped the sophistication of onboard AI, keeping robots from achieving the fluid intelligence needed for human-like operation.

3.2.3. Drivers and Hardware Interfaces

The software interface between high-level control and physical hardware remained perpetually unstable. Hardware vendors provided poorly maintained, proprietary drivers that lacked modern features and documentation. Critical tasks like sensor calibration or motor tuning became multi-week ordeals requiring deep expertise in vendor-specific protocols.

When firmware updates inevitably broke existing integrations, teams had to reverse-engineer interfaces or write new drivers from scratch. This fragile layer demanded constant maintenance, draining the already-scarce resources of robotics companies. Without reliable ways to interface with hardware, even the most sophisticated AI remained trapped in imagination.

3.3. Ecosystem and Tooling

Beyond core technology challenges, robotics suffered from a severely underdeveloped ecosystem of development tools. This gap didn't just slow progress – it systematically prevented the field from achieving the reliability and scalability needed for commercial deployment.

3.3.1. High Stakes, Low Quality

The inherent physical risks of robotics demanded enterprise-grade software quality, yet the field's tooling remained perpetually immature. Most open-source packages emerged from research labs or hobbyist projects, lacking systematic testing, documentation, or professional maintenance – a reality starkly illustrated by the near-total absence of unit tests in most robotics packages.

Without major tech companies fully committed to maintaining these projects, critical infrastructure remained dangerously unstable. When enterprises attempted to adopt these tools, they often discovered severe bugs only after months of integration work, forcing them to retreat into costly vertical integration.

3.3.2. Lack of Visualization and Observability

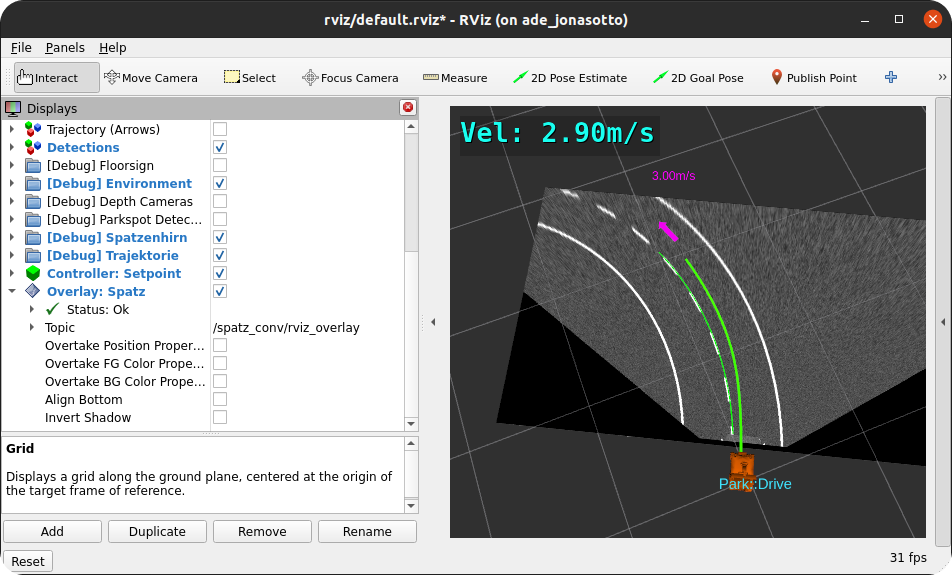

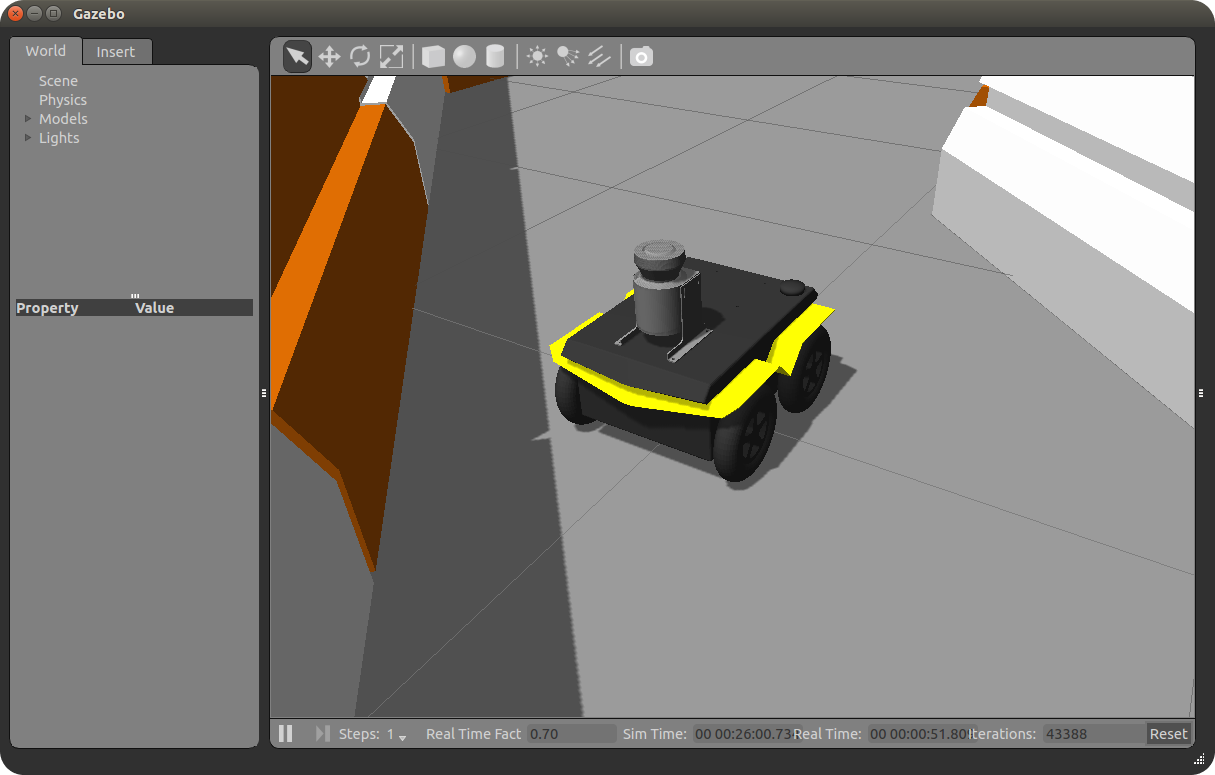

Developing autonomy systems requires interpreting complex streams of sensor data while diagnosing decisions made by multiple AI and control processes in real-time. Yet the field lacked robust tools for visualization, debugging, and system introspection. Popular frameworks like RViz offered only basic 3D overlays, while newer platforms like Foxglove missed crucial features for deep system observability until recently. This tooling gap made it challenging to effectively tune long-horizon action planners, diagnose sensor issues, or correlate complex system behaviors – turning basic development tasks into lengthy debugging ordeals.

3.3.3. Lack of Realistic Simulators

Perhaps the most crippling ecosystem gap was the absence of high-fidelity simulation platforms. While autonomous vehicles had partial solutions like CARLA and Applied Intuition, general-purpose robotics had no equivalent. Gazebo attempted to fill this role but lacked realistic physics, sophisticated sensor emulation, and industrial-grade stability. Unity's industrial simulation tools never reached the sophistication needed for serious AI development. Without reliable simulators, teams couldn't effectively use reinforcement learning or other data-intensive techniques, as real-world training proved too costly and dangerous. This limitation alone kept advanced AI approaches perpetually trapped in proof-of-concept stage.

The software bottleneck proved as fundamental as any hardware limitation in keeping robotics trapped in laboratories. No matter how sophisticated the mechanical platform, fragmented AI models and immature development tools made reliable deployment impossible. Only the recent emergence of unified intelligence architectures, robust simulation frameworks, and professional-grade infrastructure has finally shattered this ceiling, enabling the software maturity needed for true robotics.

4. Fragmented Landscape: Missing Industry Foundation

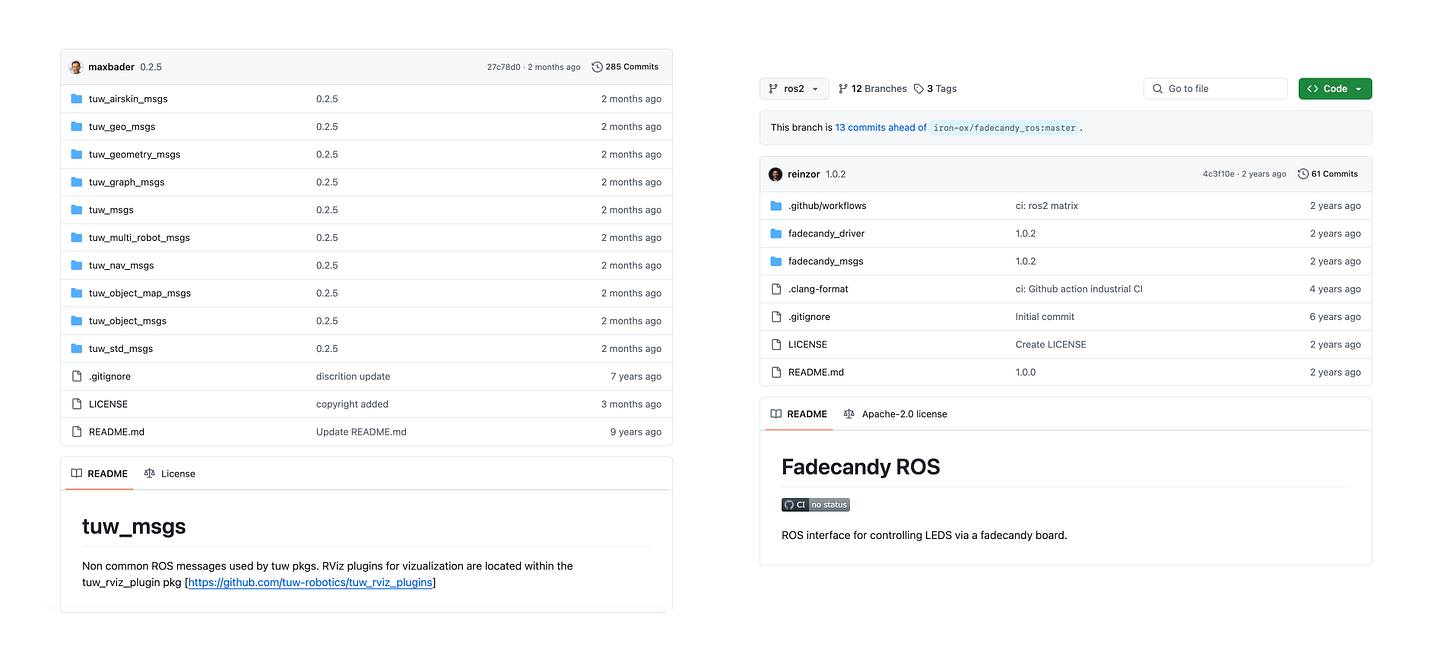

While other technologies like personal computers and smartphones flourished through shared standards, robotics remained trapped in a maze of incompatible hardware and software. The inability to establish standardized platforms is one of its most crippling structural failures.

4.1. Lack of Hardware Standardization

The absence of standardized hardware interfaces created a paralyzing feedback loop. Each manufacturer developed proprietary components – from custom motor controllers to specialized end effectors – that worked only within their ecosystem. Even basic elements like power supplies and communication protocols varied wildly between vendors. This fragmentation made it nearly impossible to mix components from different suppliers or upgrade individual parts without replacing entire subsystems.

The cost implications were severe. Without standardization, every new robot became a full-blown engineering project requiring custom adaptors, firmware modifications, and mechanical interfaces. The resulting complexity drove up both development time and unit costs, keeping robotics perpetually confined to specialized, high-margin applications. More fundamentally, the lack of standard hardware platforms prevented the emergence of the specialized component suppliers and economies of scale that had revolutionized other industries.

4.2. Lack of Software Compatibility

Software fragmentation proved equally damaging. Major manufacturers like ABB, Fanuc, and KUKA each maintained their own proprietary programming languages and development environments. Code written for one platform required complete rewrites to run on another, creating massive inefficiencies and preventing the emergence of a shared knowledge base. The open-source projects that attempted to bridge this gap were too academically focused and lacked the enterprise-grade reliability needed for commercial deployment.

The absence of standardized software had far-reaching consequences. Instead of standing on the shoulders of giants, each team had to build their own ecosystem from scratch, draining resources that could have gone toward advancing Physical AI. This constant reinvention of basic functionalities prevented the formation of the shared development ecosystem that had accelerated progress in fields like web services and mobile applications.

This dual fragmentation of hardware and software created a systemic barrier to scaling. Without standardized platforms, innovations remained trapped in isolated silos, unable to spread through the broader community. The field became locked in a cycle where each new project started from scratch, preventing the accumulation of technological momentum. This lack of a unified foundation kept robotics trapped as a collection of isolated achievements, forever short of evolving into an industry capable of delivering robots with human-level autonomy.

5. Cultural Barriers: Why Innovation Stalled

Beyond technical hurdles, robotics was held back by deep-seated cultural limitations that systematically prevented innovation. The field never developed the commercial mindset needed to bridge the gap between prototypes and viable products. These cultural barriers proved especially damaging as they prevented the convergence of advances in hardware, software, and AI needed for general-purpose autonomy.

5.1. Lack of Product Focus

The robotics field remained trapped in a demonstration mindset, prioritizing minor achievements over commercial viability. Research labs and hobbyists built impressive prototypes that could fold laundry or fetch drinks but rarely developed product roadmaps addressing real market needs. Even well-funded projects like Willow Garage's PR2 lingered in demo mode until resources ran dry, leaving behind shelved experiments rather than industrial momentum.

Boston Dynamics exemplified this pattern, pursuing ambitious visions of humanoid robots without a clear path to revenue. Their mesmerizing demonstrations felt more like science fair exhibitions than steps toward mass deployment. This stands in stark contrast to newer entrants like Tesla and Figure that prioritize sleek, user-friendly designs with a tangible goal: deploying robots people will actually buy.

5.2. Hardware-Bound Mindset

The field remained fixated on electromechanical perfection while neglecting the crucial AI layer needed for real-world adaptability. Founders with strong hardware backgrounds often treated software as an afterthought, assuming intelligence could be naturally added once physical systems were sound. This "build it and brains will follow" mentality repeatedly doomed projects, leaving them stranded without the AI to match.

Video: “Robots Are About Actuators, Not AI” (Incorrect)

Despite the clear rise of deep learning, teams were slow to embrace advanced software capabilities. When their machines ventured into unstructured environments, they quickly hit the limits of rigid rule-based control and underpowered AI models. The missing breakthroughs weren't about mechanical wizardry but about enabling machines to perceive and reason about their world.

5.3. Territorialism and Talent Deficiency

Robotics suffered from both a talent drain and cultural insularity. While building commercially-viable robots demands elite skills across mechanical, electrical, and software domains, the field struggled to attract and retain top-tier engineers. These specialists often gravitated toward better-funded roles in software, consumer electronics, or AI research at tech giants.

The old-guard robotics community's territorial attitude further compounded this problem. Ambitious software and AI specialists – crucial for next-generation autonomy – found the environment unwelcoming and moved on. Only recently has a fresh wave of interdisciplinary teams, often led by veterans from consumer hardware and AI powerhouses, begun attracting the world-class talent necessary for breakthrough innovation.

5.4. Misaligned Incentives

The financial ecosystem surrounding robotics created impossible tensions between long-term development needs and short-term return expectations. Investors, dazzled by viral demos, would funnel money into robotics startups only to demand rapid returns that clashed with the painstaking reality of building reliable machines. After years of sunk capital without revenue, these backers often withdrew, triggering boom-bust cycles that derailed promising research.

Corporate involvement complicated matters further. Major manufacturers sometimes treated robotics initiatives as hedging bets rather than core commitments. In some cases, success in robotics could undercut their existing business lines, creating unspoken incentives to keep ambitious autonomy projects on a tight leash. Without patient capital and coherent strategic backing, many promising ventures collapsed just when they needed the most resources to transition from prototype to product.

These cultural barriers – lack of product focus, hardware-centric thinking, territorialism, and misaligned incentives – proved as formidable as any technical challenge. The field remained fragmented and underpowered, surviving on sporadic sparks of interest rather than sustained collaboration. Only now, as global forces demand practical automation and AI finally offers robust perception and reasoning, have we seen the perfect storm of talent, capital, and ambition necessary to deliver on robotics' true promise.

IV. What’s Different Now: Gearing Up for Transformation

Despite billions in investment and countless breakthroughs, no one managed to create machines capable of matching human capabilities. Today, this stalemate is finally breaking. A perfect combination of breakthrough hardware, sophisticated AI architectures, and desperate market pressure has transformed robotics from perpetual research into imminent reality. What once seemed decades away now stands at the threshold of mass deployment.

1. Hardware for Human-Level Dexterity

Robots need hardware that matches our innate dexterity, resilience, and efficiency – a challenge that has, until recently, stalled every serious effort at general-purpose automation. Today, however, sensor and actuator technologies are finally catching up, driven by massive research investments and demanding product roadmaps. From high-fidelity vision and tactile arrays to power-dense electric actuators and flexible "robot muscles," we're witnessing the birth of a hardware ecosystem capable of supporting true physical AI.

1.1. Sensor Breakthroughs

Recent advances in vision, touch, proprioception, and force sensing have enabled machines to perceive and interact with unstructured environments at human-like fidelity. These capabilities shatter the constraints that once kept robots trapped in carefully controlled factory floors.

1.1.1. Vision

Vision systems have undergone a fundamental transformation, moving beyond simple cameras to integrated perception suites that rival human spatial awareness. Modern humanoids combine multiple sensor modalities – high-resolution cameras, structured light sensors, and compact LiDAR – to achieve comprehensive environmental understanding. Tesla's Optimus leverages automotive-grade vision systems for near-360° coverage, while Figure's latest platform integrates depth sensing and peripheral vision in a form factor suitable for mass production.

This leap in capability isn't just about better hardware. The integration of advanced AI processing enables robots to extract semantic meaning from raw visual data in real-time, understanding not just where objects are but how they can be manipulated. This fusion of robust sensors with sophisticated perception models enables the fluid, adaptive behavior essential for true labor replacement.

1.1.2. Touch and Tactile

The emergence of viable tactile sensing is another critical breakthrough. New optical tactile sensors transform touch into an imaging problem, enabling robots to detect subtle surface features, pressure distributions, and slip conditions. GelSight's evolution from lab prototype to commercial product exemplifies this shift – their latest sensors provide high-resolution contact mapping in robust, finger-sized packages suitable for industrial deployment.

More fundamentally, distributed tactile arrays are becoming standard features in advanced manipulators. Chinese firm PaXini's DexH13 hand incorporates thousands of integrated sensors, while Meta's ReSkin technology demonstrates how magnetic sensing can enable flexible, durable "electronic skin". These advances finally give robots the fine touch feedback needed for delicate manipulation tasks that were once exclusive to human workers.

1.1.3. Proprioception

Internal awareness – the ability to precisely track joint positions and body configuration – has evolved from a limiting factor into a solved engineering challenge. Modern humanoids integrate high-resolution encoders with distributed inertial measurement units (IMUs), enabling the kind of fluid, coordinated movement that human tasks demand. This proprioceptive foundation proves especially crucial for bipedal robots, where instant balance adjustments must account for changing loads and surfaces.

Video: UBTECH’s New Humanoid Robot, Walker S1

UBTECH's Walker demonstrates how mature this technology has become, with over 40 joints providing continuous force and position feedback. These systems maintain stability through rapid micro-adjustments, matching human-like adaptability in dynamic environments. The miniaturization and cost reduction of these sensors has transformed proprioception from an expensive luxury into a standard feature of commercial platforms.

1.1.4. Force and Torque

Force sensing completes the sensory foundation needed for human-level manipulation. Compact six-axis force/torque (F/T) sensors, once confined to research labs, now enable precise control of interaction forces in industrial settings. Tesla's Optimus uses this capability to manage variable loads during assembly tasks, while newer humanoids incorporate distributed force sensing throughout their structure for more natural human-robot collaboration.

This breakthrough in force perception enables "soft robotics" – machines that can yield appropriately when encountering resistance rather than rigidly following programmed paths. The ability to modulate force output while maintaining precision transforms robots from dangerous industrial equipment into collaborative tools suitable for shared human workspaces.

Advances in sensor technologies – vision, touch, proprioception, and force sensing – finally provide robots with the comprehensive environmental awareness needed to replace human labor. More importantly, these advances have crossed the threshold from research curiosities to robust, commercially viable systems suitable for mass production. This sensory foundation, combined with the actuator and compute breakthroughs we'll explore next, completes the hardware picture needed for general-purpose autonomy.

1.2. Actuator Breakthroughs

The quest for human-like motion has historically forced stark compromises between power, efficiency, and safety. Today's actuator revolution dismantles these trade-offs through innovations across multiple frontiers, enabling the fluid, adaptive movement essential for true labor replacement. This breakthrough spans four critical domains, each marking a decisive advance beyond traditional limitations.

1.2.1. Advances in Electric Actuators

The industry's shift to electric actuation signals more than a technical preference – it unites manufacturing scalability with performance levels once exclusive to hydraulic systems. Tesla's custom actuators for Optimus exemplify this shift: high-torque density motors paired with advanced thermal management enable burst power matching hydraulic systems while maintaining the reliability needed for continuous operation. Even Boston Dynamics, long wedded to hydraulics for dynamic movement, has announced a transition to electric drives, citing superior maintainability and control precision.

Video: Tesla’s Robot Actuators

This electric renaissance stems from breakthroughs in materials and manufacturing. New rare-earth magnetic compositions enable smaller, more powerful motors, while advances in power electronics deliver precise control at higher voltages. The result is actuators that can match human muscle performance while remaining light enough for mobile platforms – a cornerstone capability for viable humanoid deployment.

1.2.2. Mechanical Architecture Innovations

Innovations in mechanical architecture are overcoming the limitations of conventional rotary drives. Apptronik's breakthrough with linear actuators demonstrates how rethinking basic mechanical principles can yield transformative capabilities – their direct-drive approach delivers unprecedented force control while dramatically improving impact resistance.

Video: IMSystems’ Archimedes Drive Transmission System

Even more revolutionary are approaches that completely reimagine power transmission. IMSystems' Archimedes Drive exemplifies this radical rethinking, eliminating traditional gearing in favor of rolling elements that achieve near-zero backlash with inherent shock tolerance. Chinese firm KGG Robotics’ novel planetary drive system similarly challenges convention by providing high load-bearing capacity and precise motion for humanoid robot joints such as the arms, legs, and hip joints.

These innovations address the durability and reliability demands of industrial deployment, where continuous operation in dynamic environments tests the limits of mechanical design. As these architectures mature and enter mass production, they're establishing the mechanical foundation needed for robots to escape the confines of carefully controlled factory floors.

1.2.3. Materials and Soft Robotics

Advanced materials are reshaping actuator design, pushing beyond incremental improvements to enable entirely new approaches to movement. The integration of carbon fiber composites and specialized metal alloys has transformed the core engineering equation: where traditional actuators demanded strict trade-offs between strength and weight, new materials enable unprecedented power density while enhancing thermal performance. This shift is particularly evident in humanoid platforms, where novel composites enable joint designs that match human biomechanics while maintaining the durability needed for industrial deployment.

Video: Shape-Shifting Soft Robots (MIT Seminar)

Soft robotics represents a paradigm shift from rigid mechanisms to bio-inspired designs. By leveraging elastomer-based artificial muscles and advanced materials, these systems can dynamically alter their mechanical properties like living tissue. Labs at MIT and Stanford have demonstrated capabilities previously impossible with traditional actuators – from delicate object manipulation to adaptive compliance in unstructured environments. While mass production remains a challenge, this technology promises robots that don't just move precisely but actively tune their physical characteristics to match task demands.

1.2.4. Elastic and Force-Controlled Actuators

Perhaps most crucial for human-robot collaboration is the mainstream adoption of Series Elastic Actuators (SEAs) and sophisticated force control. By incorporating spring elements and real-time force sensing, modern actuators can yield to unexpected contacts while maintaining precise position control. This inherent compliance enables robots to handle delicate tasks without risking damage or injury.
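The core idea of a series elastic actuator can be sketched in a few lines: the spring between motor and load doubles as a torque sensor, because its deflection is proportional to the transmitted torque (Hooke's law). The class below is an illustrative model only; the stiffness and torque limit are hypothetical values, and a real controller would add motor dynamics, damping, and filtering.

```python
from dataclasses import dataclass


@dataclass
class SeriesElasticJoint:
    """Idealized rotary SEA: motor -> torsion spring -> load (hypothetical parameters)."""
    stiffness: float   # spring constant in N*m/rad
    max_torque: float  # safety limit on transmitted torque in N*m

    def measured_torque(self, motor_angle, load_angle):
        # The spring deflection acts as the torque sensor.
        return self.stiffness * (motor_angle - load_angle)

    def motor_target(self, load_angle, desired_torque):
        # Clamp the request, then choose the motor angle whose spring
        # deflection produces exactly that torque at the current load angle.
        torque = max(-self.max_torque, min(self.max_torque, desired_torque))
        return load_angle + torque / self.stiffness


joint = SeriesElasticJoint(stiffness=100.0, max_torque=10.0)

# Normal operation: a 0.05 rad deflection corresponds to 5 N*m at the joint.
print(joint.measured_torque(motor_angle=0.05, load_angle=0.0))

# Unexpected contact: the load is blocked at 0 rad while 20 N*m is requested.
# The target is clamped so the spring never transmits more than the limit.
blocked_target = joint.motor_target(load_angle=0.0, desired_torque=20.0)
print(joint.measured_torque(blocked_target, 0.0))
```

A softer spring gives finer torque resolution and gentler contact but makes precise position control harder, which is the control-precision trade-off noted earlier for series elastic elements.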

Video: Series Elastic Actuators (SEA)

Recent breakthroughs in backdrivable actuators have pushed these capabilities further. New mechanical architectures achieve natural compliance through innovative transmission designs that allow external forces to move the robot's joints with minimal resistance. This bidirectional force transparency – where the actuator can both apply precise force and respond fluidly to external forces – transforms how robots interact with their environment. Tasks that once required complex force feedback loops can now leverage the actuator's inherent mechanical properties.

The commercial impact is profound. Tesla's Optimus and Figure's humanoid platforms demonstrate how this sophisticated force control transforms robots from isolated industrial equipment into collaborative tools. Their actuators seamlessly handle both precision tasks and heavy lifting, establishing another milestone in creating machines that can replace human labor across diverse environments. This fusion of power with natural adaptability completes another vital piece of the hardware foundation enabling widespread robotics deployment.

1.3. On-Board Compute

The leap to true robotics demands not just better sensors and actuators, but also advances in edge computing. Where previous generations relied on off-board processing or cloud connectivity, modern humanoids integrate specialized AI accelerators that enable real-time perception and decision-making directly on the platform. This shift from tethered computing to autonomous intelligence marks another crucial milestone toward the birth of practical robotics.

Video: NVIDIA’s Jetson Thor Announcement

NVIDIA's Jetson Thor exemplifies this transformation, delivering teraflops of neural network performance in a form factor suitable for mobile robots. Tesla's approach with Optimus goes further, adapting their self-driving computer architecture to handle the heavy software workloads of a humanoid. These platforms don't just process data – they run sophisticated AI models that enable fluid adaptation to unstructured environments.

The implications extend beyond raw performance. By combining AI accelerators with efficient ARM processors and specialized robotics co-processors, manufacturers can now optimize for the delicate balance between computational power and energy consumption. This enables extended operation on battery power while maintaining the sophisticated capabilities – from real-time SLAM to natural language interaction – that define truly autonomous systems.

These breakthroughs in actuator technology shatter decades of compromises between power, precision, and adaptability. Where previous generations struggled with rigid, unsafe mechanisms unsuited for human environments, today's advances in motors, transmissions, and materials enable fluid movement without sacrificing reliability. The actuator barrier that long confined robotics to demonstrations has finally fallen.

2. Physical AI for Adaptive Intelligence

The leap from rigid automation to true robotics hinges on a revolutionary advance in machine intelligence. Physical AI fuses perception, reasoning, and action into fluid capabilities that match human adaptability, transforming robots from specialized tools into genuine replacements for labor.

2.1. Unified AI Models

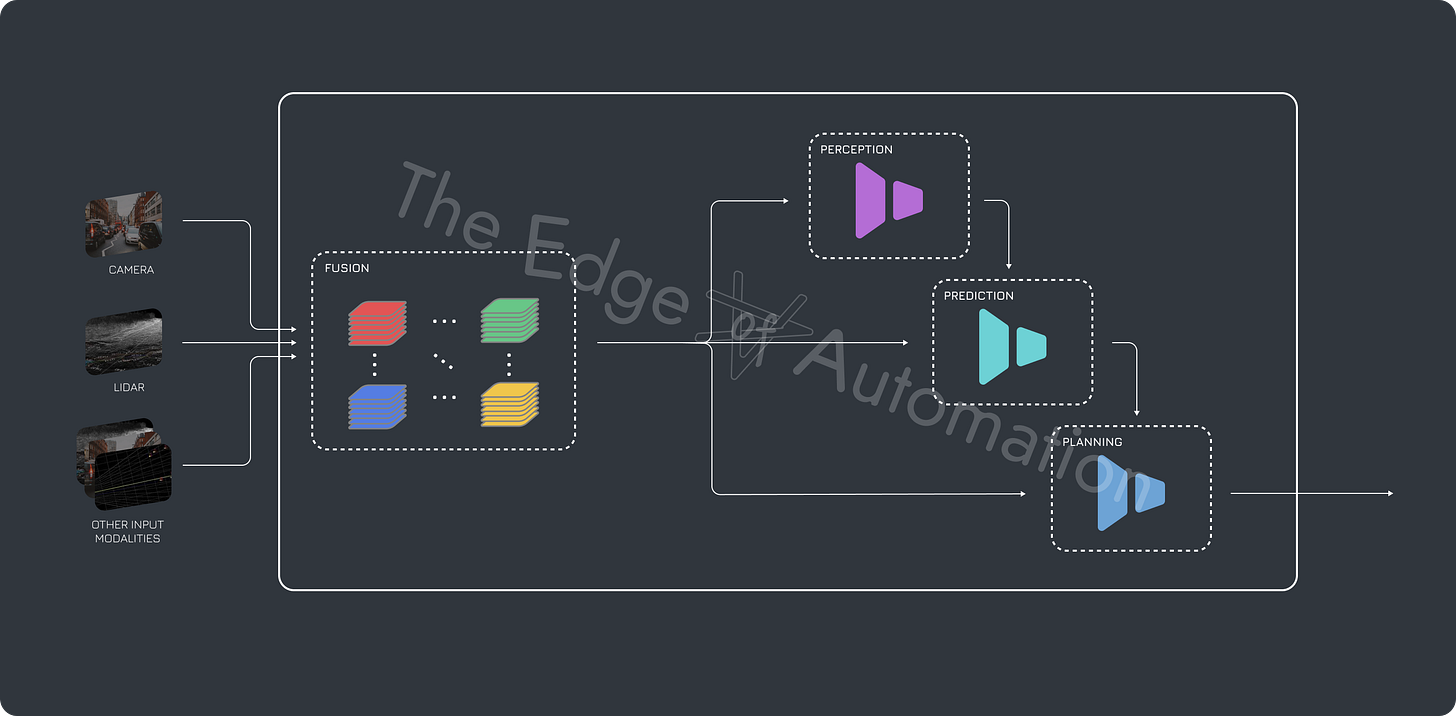

The shift from fragmented, single-purpose AI models to unified architectures represents a fundamental breakthrough in robotics. By seamlessly integrating perception, planning, and control, these new systems enable the fluid adaptation to uncertainty that defines human-like intelligence – a decisive break from decades of rigidity.

2.1.1. Multimodality and Partial Inputs

Early robotics treated each sensor stream as an isolated problem, leading to systems that failed when any single input degraded. Modern architectures instead fuse diverse data sources – vision, touch, sound, force – into unified representations that remain robust even with partial information. Projects like DETR3D and BEVFormer pioneered this approach in autonomous vehicles, showing how neural networks could seamlessly combine camera feeds, LIDAR scans, and radar signals.

The implications reach far beyond improved perception. By training networks to operate with incomplete or noisy data, robots gain resilience previously impossible with traditional approaches. For example, a humanoid can maintain balance even if a joint sensor fails, or complete a grasping task despite occluded camera views. This adaptability to sensor degradation is a major step toward machines that can handle real-world uncertainty.
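The robustness to missing inputs can be illustrated with a deliberately simple stand-in. Real systems learn their fusion (for example with attention layers); the sketch below instead uses a hand-written weighted average that renormalizes over whichever modalities are present. The function name, modality names, and weights are assumptions for illustration only.

```python
from typing import Dict, List, Optional


def fuse_modalities(
    features: Dict[str, Optional[List[float]]],
    weights: Dict[str, float],
) -> List[float]:
    """Weighted average of per-modality feature vectors.

    A modality whose value is None is treated as failed or occluded,
    and the remaining weights are renormalized to sum to one.
    """
    available = {name: vec for name, vec in features.items() if vec is not None}
    if not available:
        raise ValueError("no sensor modality available")

    total = sum(weights[name] for name in available)
    dim = len(next(iter(available.values())))
    fused = [0.0] * dim
    for name, vec in available.items():
        w = weights[name] / total
        for i, x in enumerate(vec):
            fused[i] += w * x
    return fused
```

With both a camera and a LIDAR vector present the output blends the two; if the LIDAR entry is `None`, the camera vector is passed through unchanged rather than the pipeline failing.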

2.1.2. Foundation Models

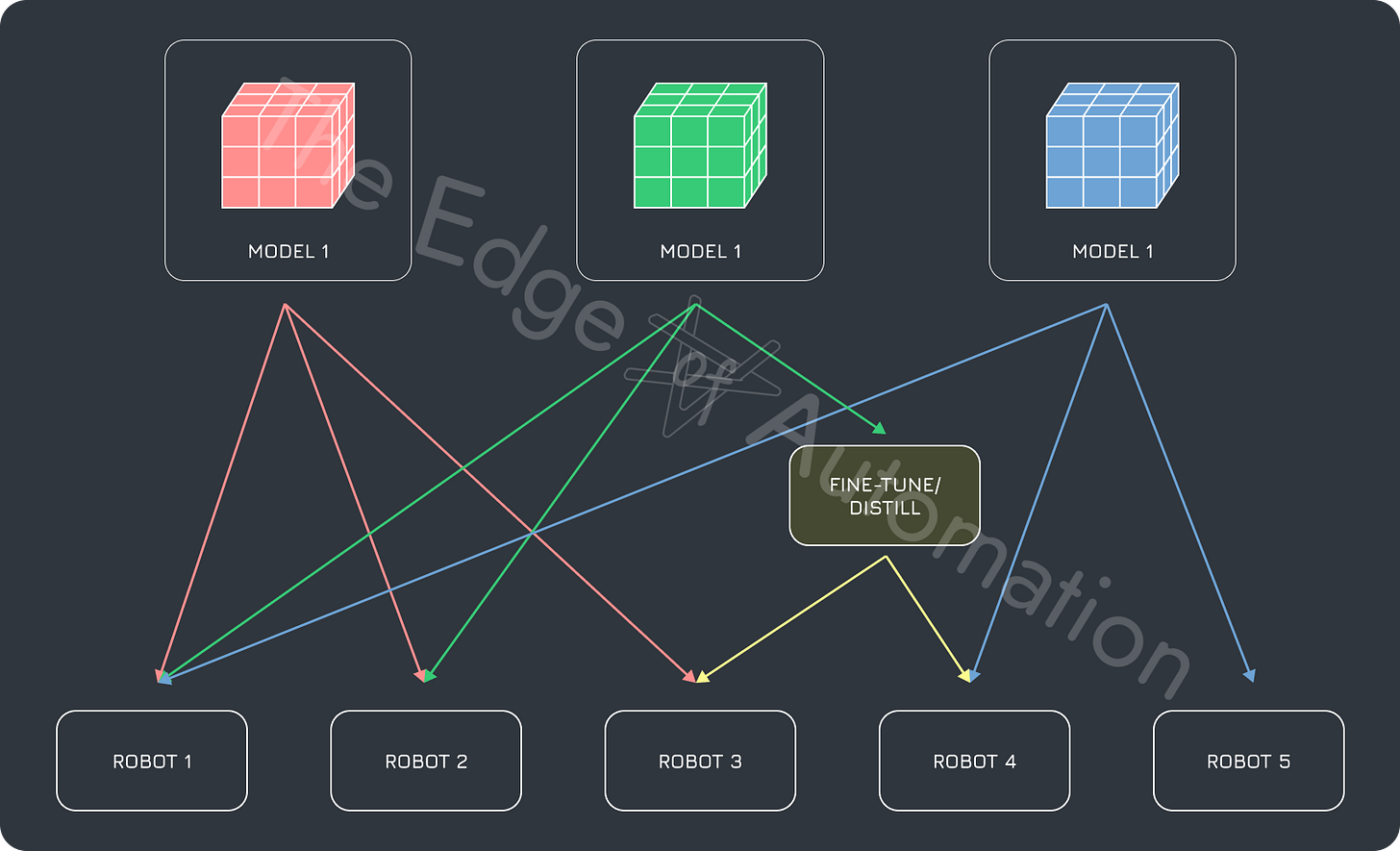

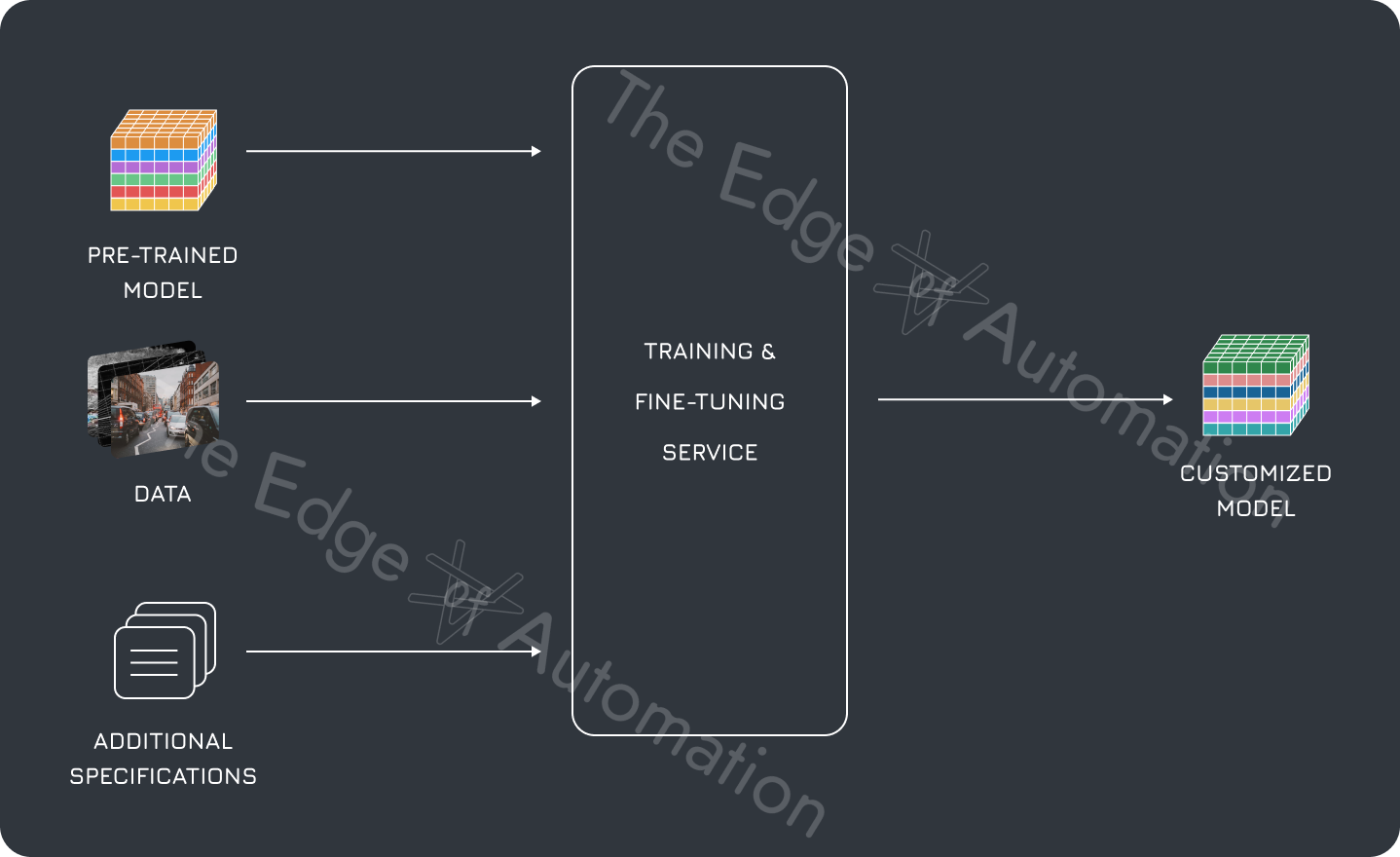

The data requirements for robust multimodal AI would have been prohibitive under manual labeling approaches. Foundation models solve this through self-supervised learning at massive scale. Networks like BEVFusion and ProFusion3D are pre-trained on vast quantities of unlabeled sensor data, learning to predict missing modalities and identify consistent patterns across different inputs. Once pre-trained, these models can be efficiently fine-tuned for specific tasks using minimal additional data.

This approach dramatically reduces integration complexity. Rather than maintaining separate codebases for every sensor configuration, robotics teams can deploy a handful of foundational networks that adapt to new hardware through targeted fine-tuning. The economic implications are profound: development cycles shrink from years to months, while reliability improves through exposure to more diverse training scenarios.

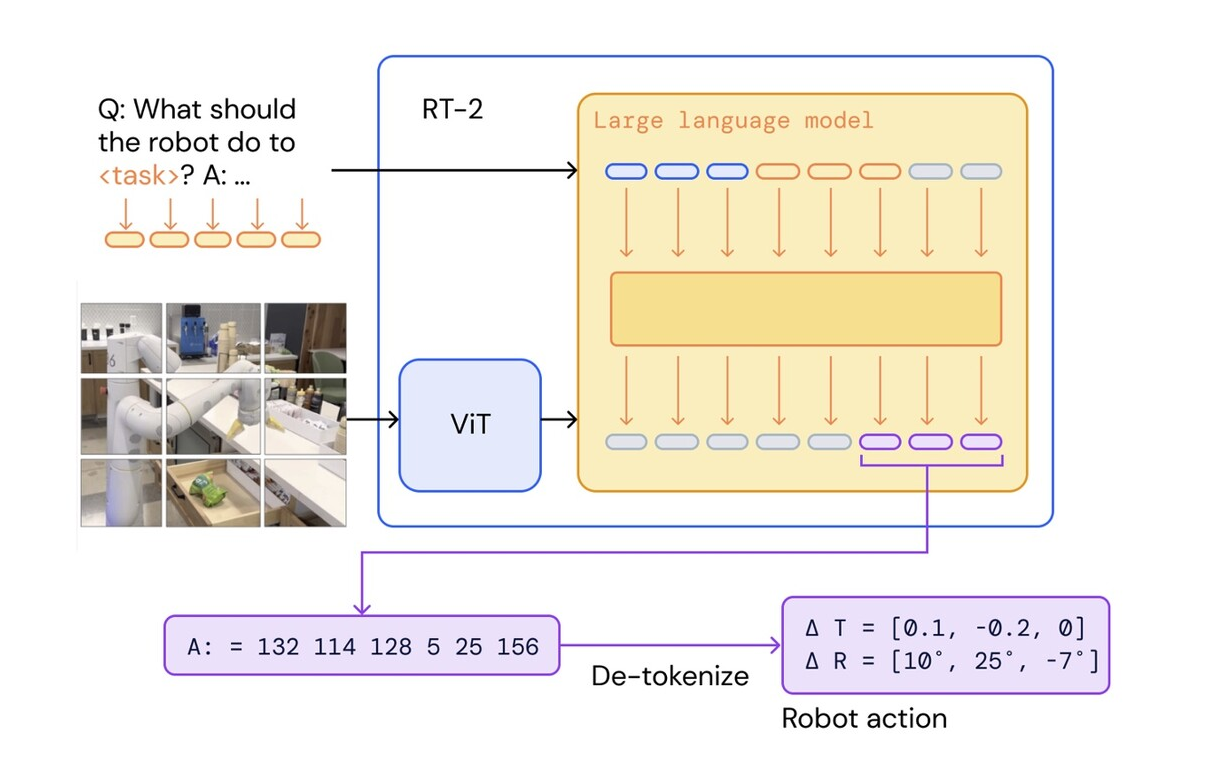

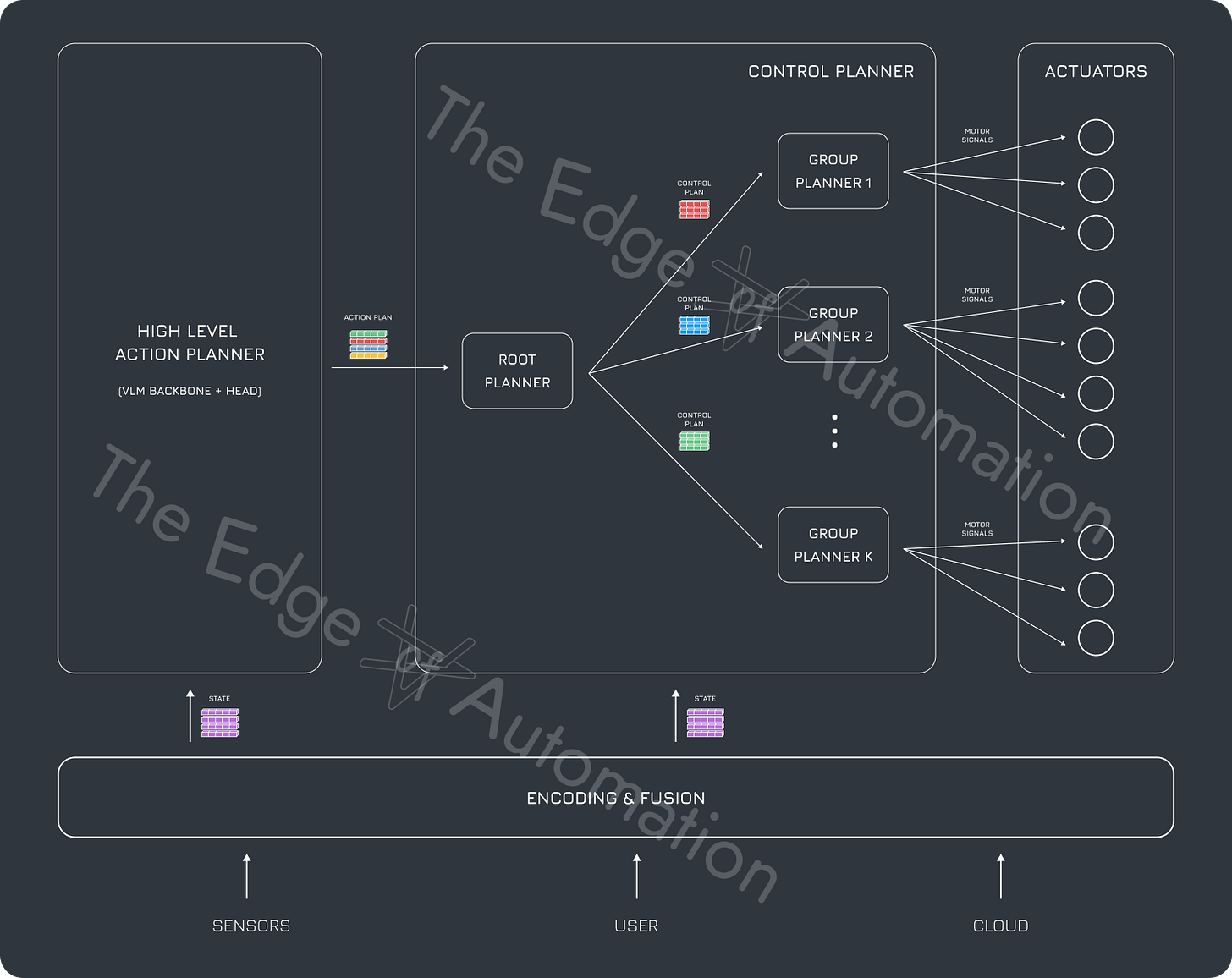

2.1.3. Vision-Language-Action Models

The emergence of large language models catalyzed a new paradigm in robotics intelligence. Early systems like SayCan demonstrated how language models could break down complex commands into actionable sequences. More recent architectures like PaLM-E and RT-2 go further, directly mapping natural language and visual inputs to motor actions. A robot can now interpret instructions like "sort these items by color" without explicit programming for each step.

Vision-Language-Action (VLA) models represent a fundamental advance over traditional control hierarchies. By unifying perception, reasoning, and action generation in a single network, they enable fluid adaptation to novel situations. A humanoid equipped with well-designed VLA models can apply learned manipulation skills to unfamiliar objects or recombine known actions to solve new tasks – capabilities that were pipe dreams just a few years ago.

2.1.4. AI-Driven Control and Adaptability

Traditional approaches in low-level control relied on hand-tuned parameters and rigid control laws. Modern systems instead use AI policies trained on motion capture data and physics simulations to coordinate multiple joints simultaneously. Tesla's Optimus exemplifies this shift: its actuator controllers learn from millions of simulated interactions, automatically adjusting to different loads and surface conditions.

AI-driven control extends to real-time adaptation. Specialized microcontrollers can run local policies that modify joint damping and stiffness on the fly, while higher-level networks coordinate overall motion strategies. The result is robots that move with unprecedented fluidity and resilience, capable of recovering from disturbances that would have crashed earlier systems.
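Adjusting joint stiffness and damping on the fly is commonly expressed as an impedance control law, where torque is a spring term plus a damper term. The sketch below assumes a single joint and replaces the learned local policy with a hand-written rule that softens the joint as contact force rises. `adapt_gains` and all numeric defaults are hypothetical.

```python
import math


def impedance_torque(q, qd, q_des, qd_des, stiffness, damping):
    """Joint torque from a virtual spring (position error) and damper (velocity error)."""
    return stiffness * (q_des - q) + damping * (qd_des - qd)


def adapt_gains(contact_force, base_stiffness=100.0, inertia=1.0,
                force_limit=20.0, min_scale=0.1):
    """Stand-in for a learned policy: lower stiffness as contact force grows.

    Damping is recomputed so the joint stays critically damped for the
    assumed inertia: d = 2 * sqrt(k * inertia).
    """
    scale = max(min_scale, 1.0 - abs(contact_force) / force_limit)
    stiffness = base_stiffness * scale
    damping = 2.0 * math.sqrt(stiffness * inertia)
    return stiffness, damping
```

In free space the joint tracks its target stiffly; under a 10 N contact the same position error produces half the torque, so the joint gives way instead of fighting the disturbance.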

At Quintet AI, we build on this foundation by offering plug-and-play AI models, from core perception engines to universal control policies, enabling clients to deploy robots in a fraction of the time traditionally required.

Together, multimodal fusion, foundation models, and VLA architectures replace the brittle, unintelligent, firmware-like software systems of traditional robotics. Robots can now perceive, reason about, and interact with their environment through unified intelligence frameworks that improve with experience.

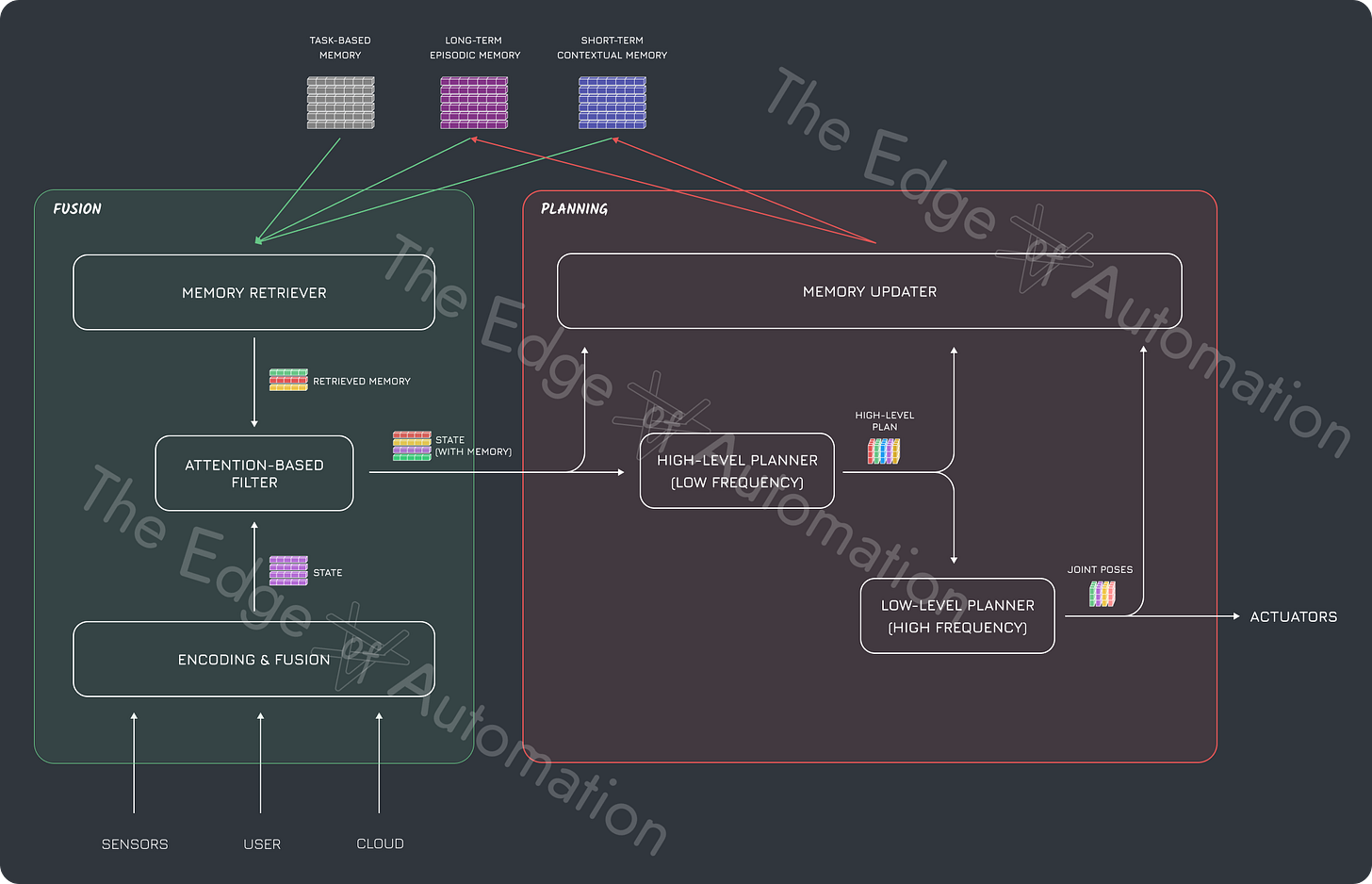

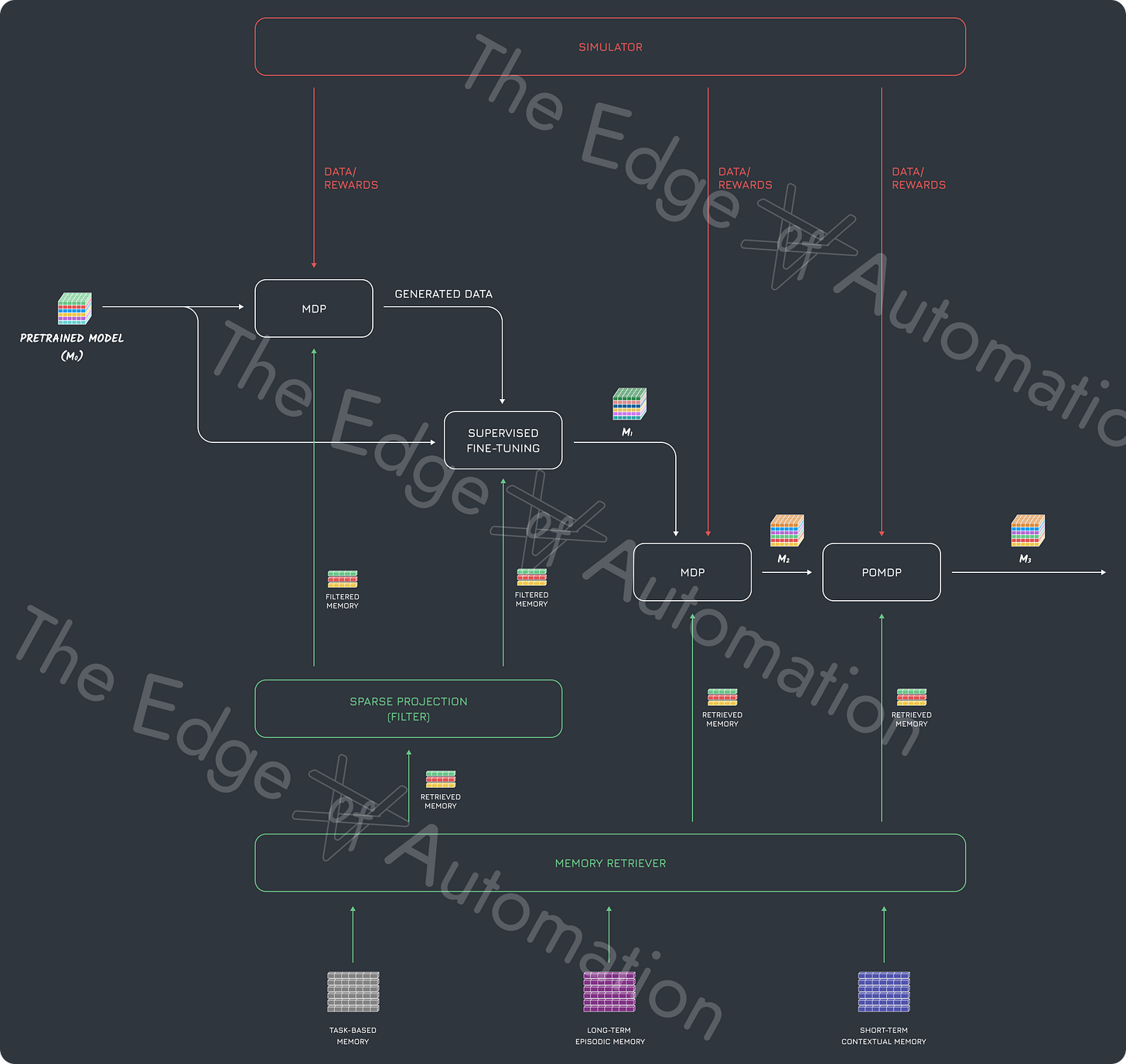

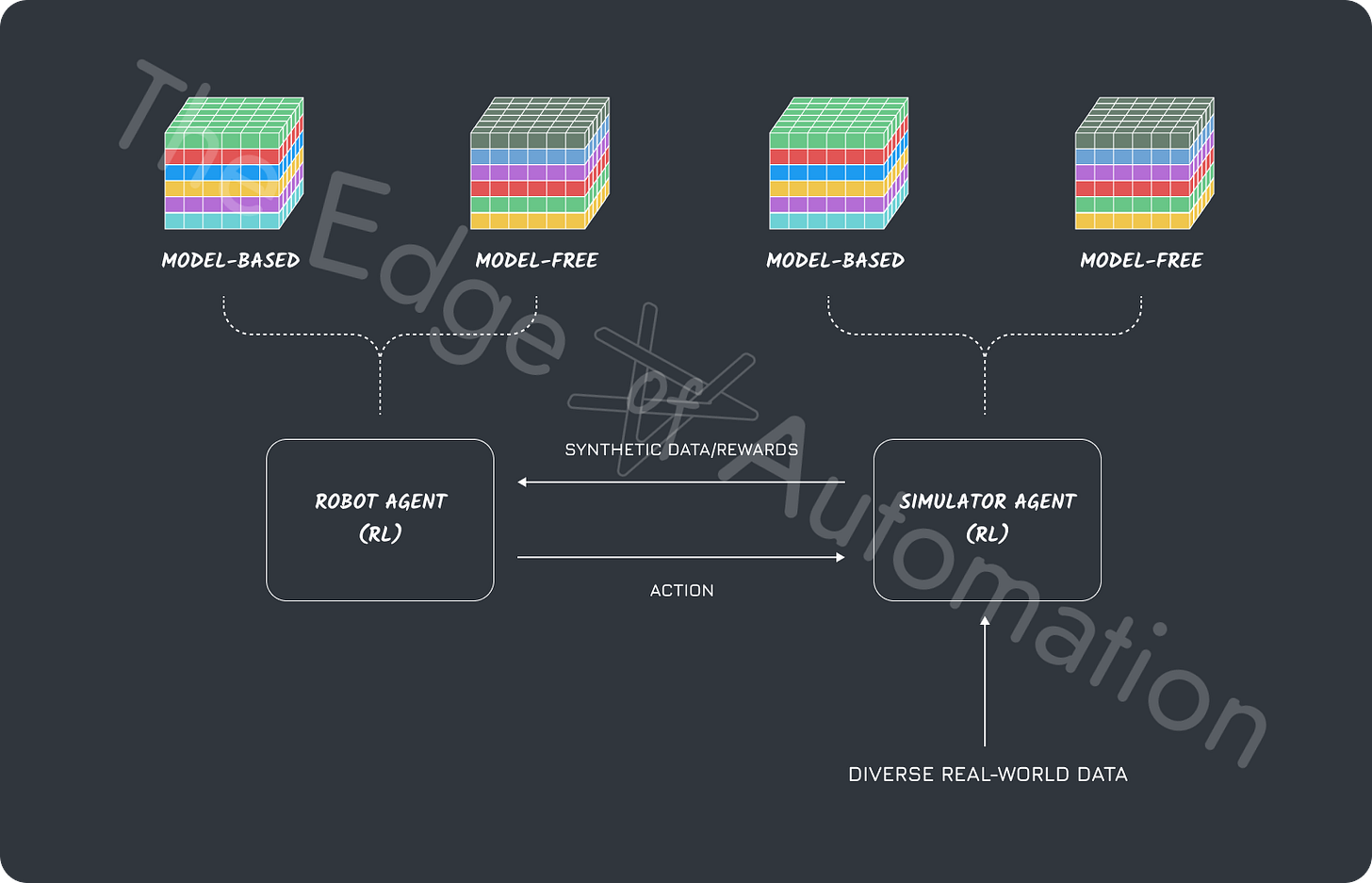

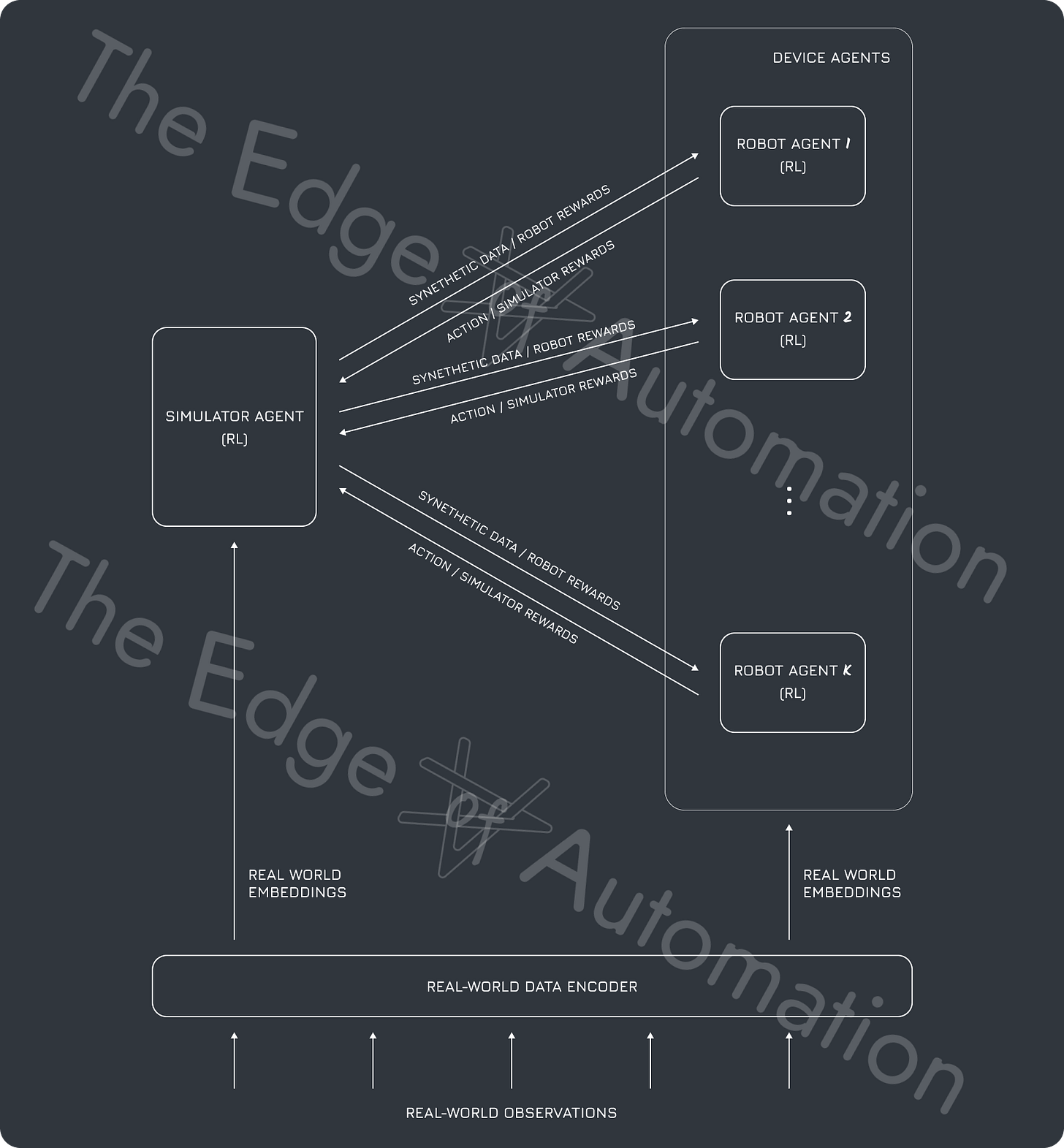

2.2. Reasoning and Memory-Based Models

Even the most sophisticated multimodal models struggle with long-horizon tasks that require strategic planning and continuous adaptation. Reasoning and memory architectures fill this gap, enabling robots to maintain context across long sequences, correct errors mid-task, and learn from experience.

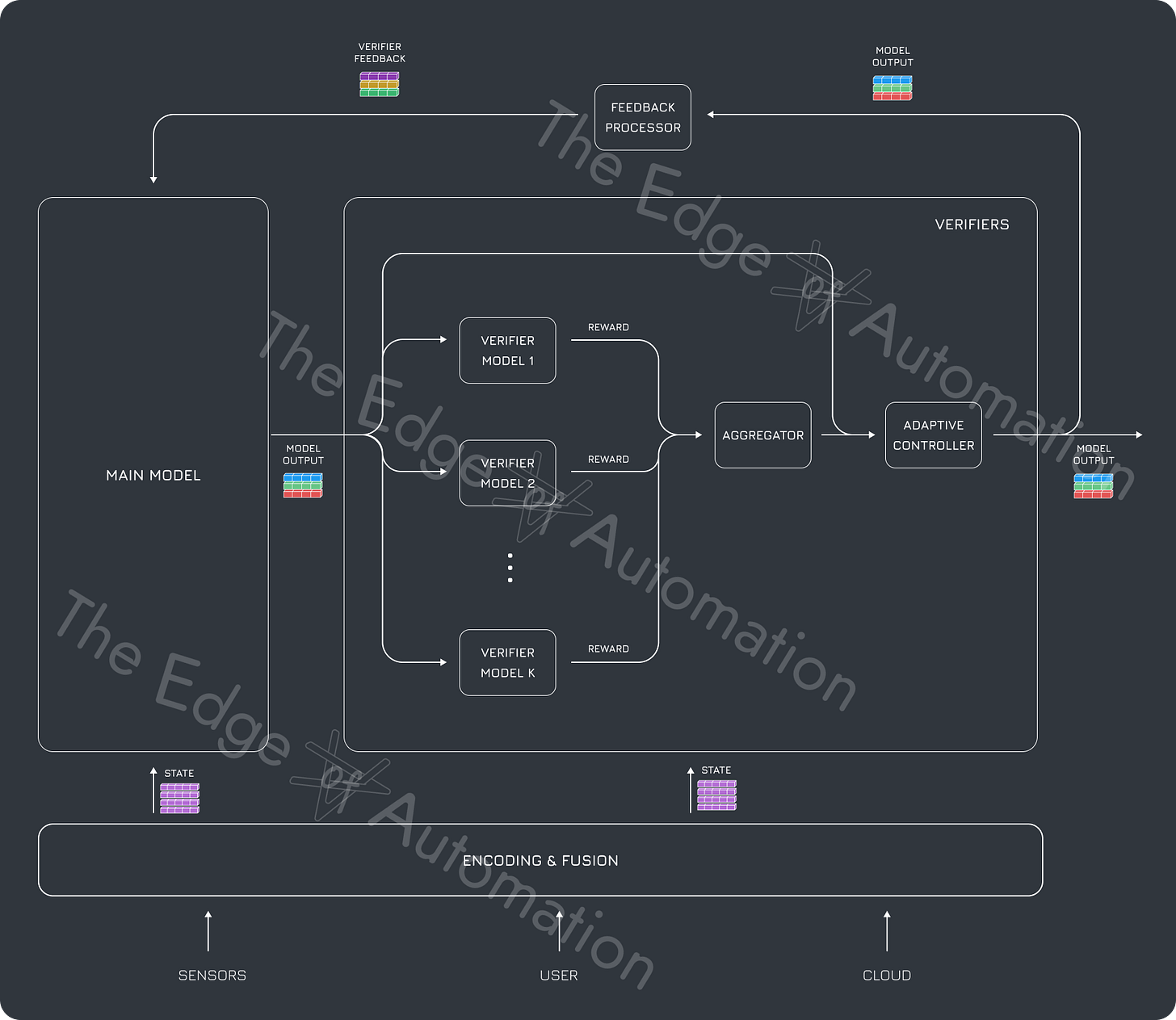

2.2.1. Test-Time Compute

The traditional emphasis on massive training-time computation overlooks a crucial insight: robots need real-time reasoning capabilities to handle dynamic environments. Test-time Compute (TTC) revolutionizes this paradigm by allocating substantial processing power during inference rather than just during training. This approach enables robots to simulate outcomes, verify decisions, and adapt plans on the fly – much like humans mentally rehearse actions before execution.

For example, a humanoid stacking boxes might run multiple forward simulations to test different grip positions or sequence variations, using a lightweight verifier model to catch potential failures before they occur. This real-time reasoning capability, achievable with models as small as 1.5B parameters (often at the expense of inference-time latency), marks a fundamental advance over traditional "act-then-correct" approaches.
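The box-stacking example amounts to a sample, simulate, verify, select loop. The sketch below is a toy version under strong assumptions: the proposal model is a uniform random sampler, the forward simulation is a one-line tilt formula, and the verifier is a linear score. All function names and constants are hypothetical.

```python
import random


def propose_grips(rng, n):
    """Stand-in proposal model: candidate grip offsets (m) from the box centre."""
    return [rng.uniform(-0.2, 0.2) for _ in range(n)]


def simulate_stack(grip_offset):
    """Toy forward model: predicted tilt (rad) grows with off-centre grip."""
    return 1.5 * abs(grip_offset)


def verifier_score(tilt, max_tilt=0.1):
    """Toy verifier: 1.0 means safe, 0.0 means a predicted failure."""
    return max(0.0, 1.0 - tilt / max_tilt)


def plan_with_test_time_compute(n_candidates, seed=0):
    """Spend inference-time compute on n candidates and keep the best one.

    Returns a (score, grip_offset) tuple.
    """
    rng = random.Random(seed)
    candidates = propose_grips(rng, n_candidates)
    scored = [(verifier_score(simulate_stack(g)), g) for g in candidates]
    return max(scored)
```

Raising `n_candidates` trades latency for plan quality: with the same seed, a larger budget evaluates a superset of the smaller budget's candidates, so the selected score can only stay the same or improve.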

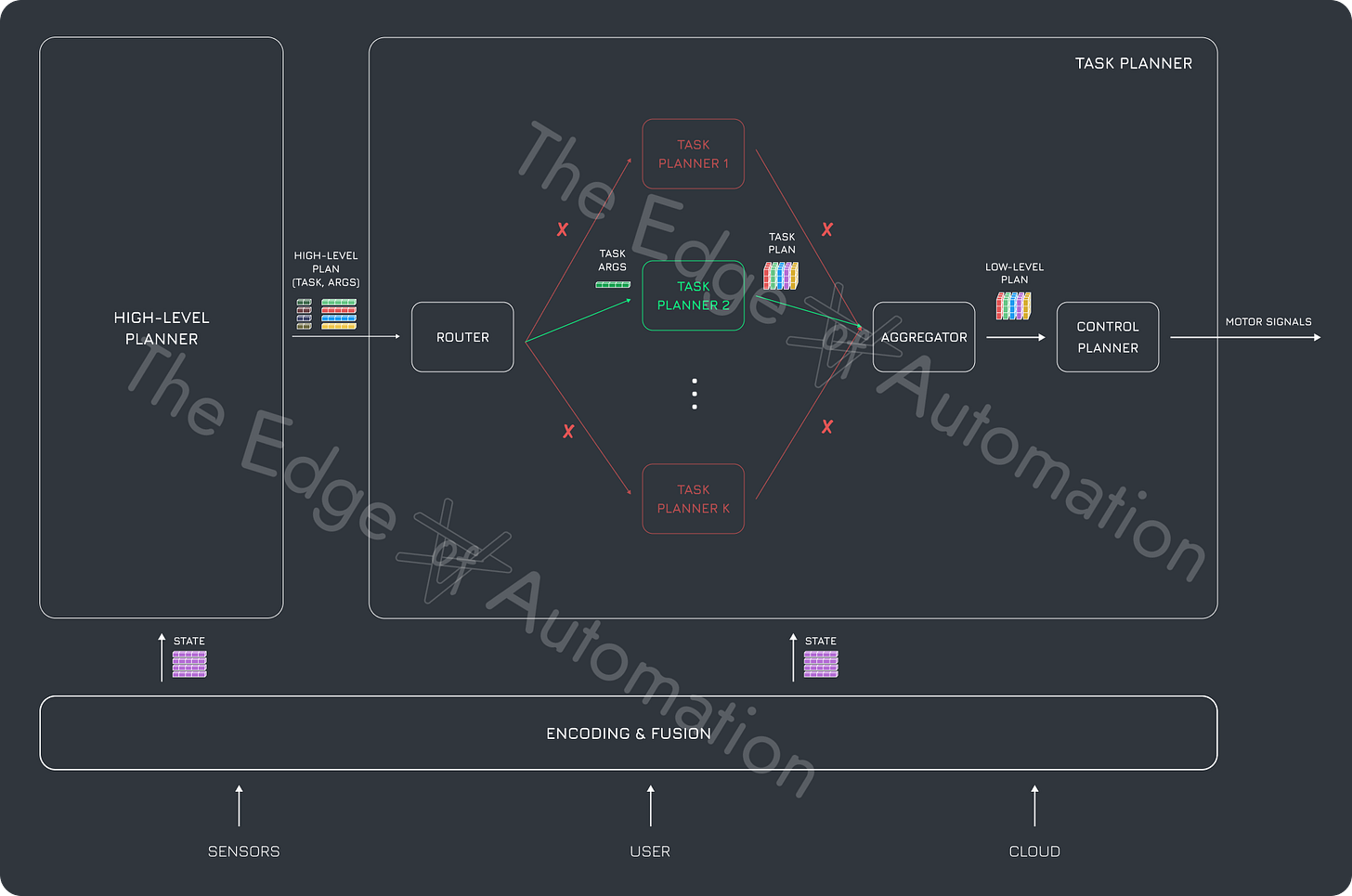

2.2.2. Advanced AI Reasoners

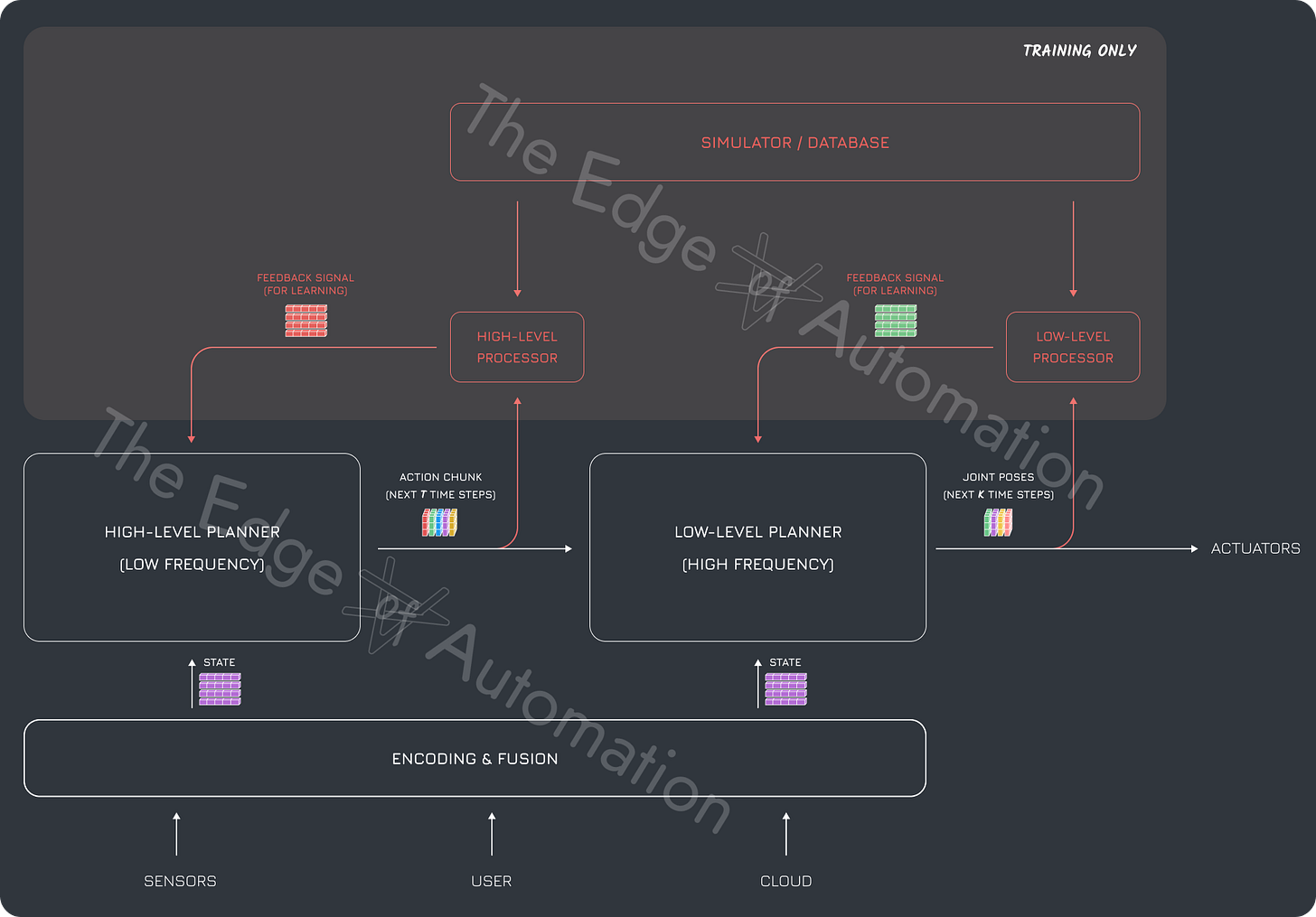

Recent breakthroughs in AI architecture have reduced the need for external iterative verification by enabling sophisticated reasoning within a single generation pass. Models like DeepSeek-R1 demonstrate how careful combinations of supervised fine-tuning and reinforcement learning can produce high-level reasoning capabilities without relying on external verifiers or feedback loops. This architectural innovation adds momentum toward the creation of robots that can plan and execute complex tasks with unprecedented efficiency.

The impact on robotics proves particularly powerful through "action chunking" – where models directly output coordinated sequences of actions divided into achievable subgoals. Stanford's CoT-VLA is one such model. Rather than continuously replanning each movement, robots can generate coherent action plans that maintain flexibility while reducing computational overhead. This capability proves crucial for complex manipulation tasks, where fluid coordination between perception, planning, and motor control determines success or failure.
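The computational saving from action chunking can be shown with a toy one-dimensional task. In this sketch the "policy" is a hand-written function that emits up to `chunk_size` steps toward a goal per call; it is a hypothetical stand-in, not how CoT-VLA or any specific model generates actions.

```python
def chunk_policy(position, goal, chunk_size, max_step=1.0):
    """Stand-in policy: return up to chunk_size moves toward the goal."""
    actions = []
    for _ in range(chunk_size):
        delta = max(-max_step, min(max_step, goal - position))
        if delta == 0:
            break
        actions.append(delta)
        position += delta
    return actions


def run_episode(start, goal, chunk_size):
    """Execute whole chunks, replanning only between them.

    Returns the final position and the number of policy calls made.
    """
    position, policy_calls = start, 0
    while position != goal:
        chunk = chunk_policy(position, goal, chunk_size)
        policy_calls += 1
        for action in chunk:
            position += action
    return position, policy_calls
```

Reaching a goal ten steps away takes ten policy calls with a chunk size of 1 and two calls with a chunk size of 5. The cost is that the robot only reacts to new observations at chunk boundaries.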

During training, these action chunks are refined against multiple objectives: matching desired robot states, aligning with human demonstrations, and optimizing for task completion. The result is systems that can decompose complex goals into executable sequences while maintaining adaptability to environmental changes. A humanoid tasked with organizing a workspace, for instance, can break down the overall objective into a series of coordinated movements – from clearing surfaces to proper item placement – while smoothly handling interruptions or unexpected obstacles. This breakthrough in planning efficiency, combined with advances in perception and control, marks a significant advancement toward robots that can truly replace human labor.

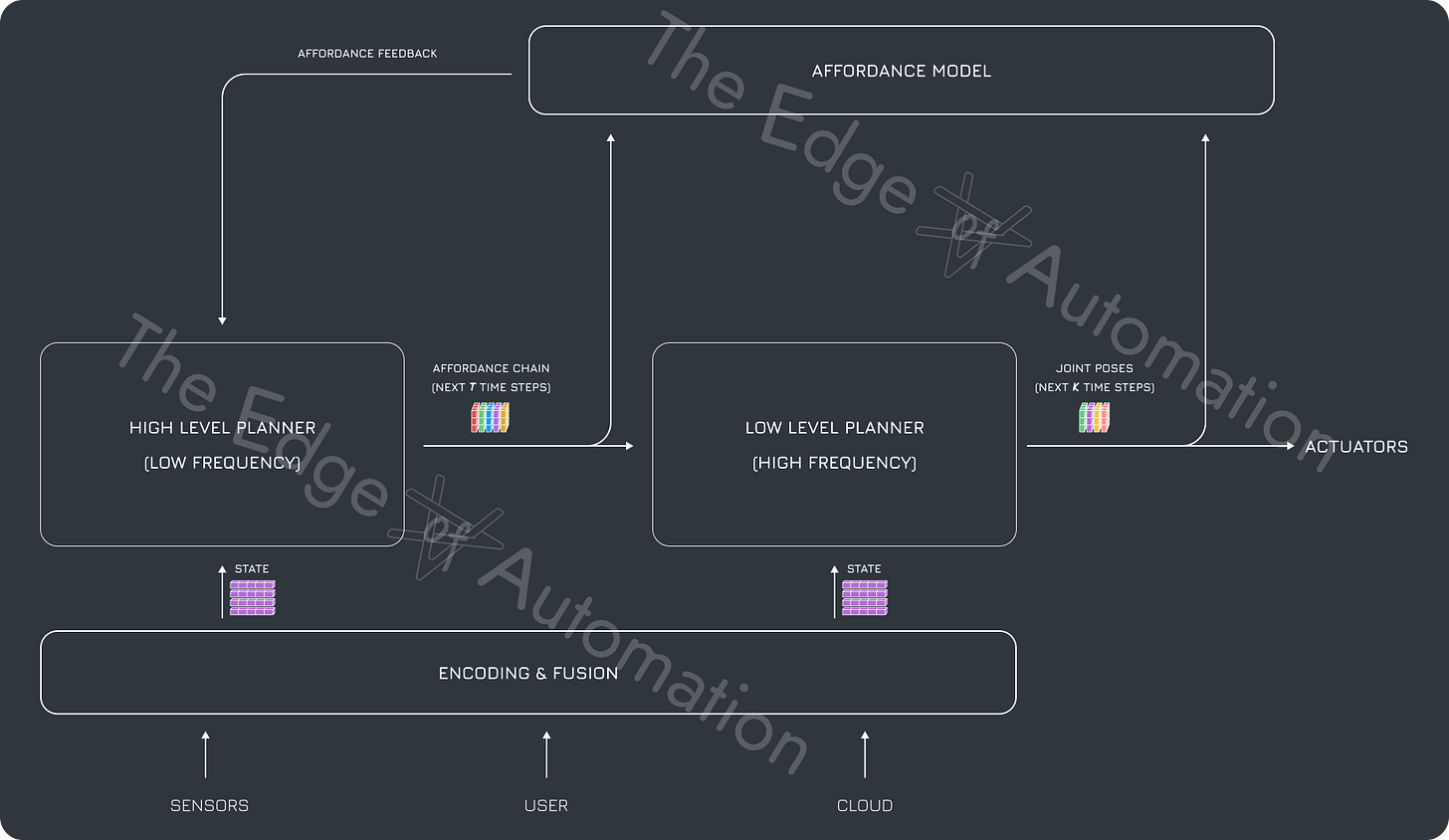

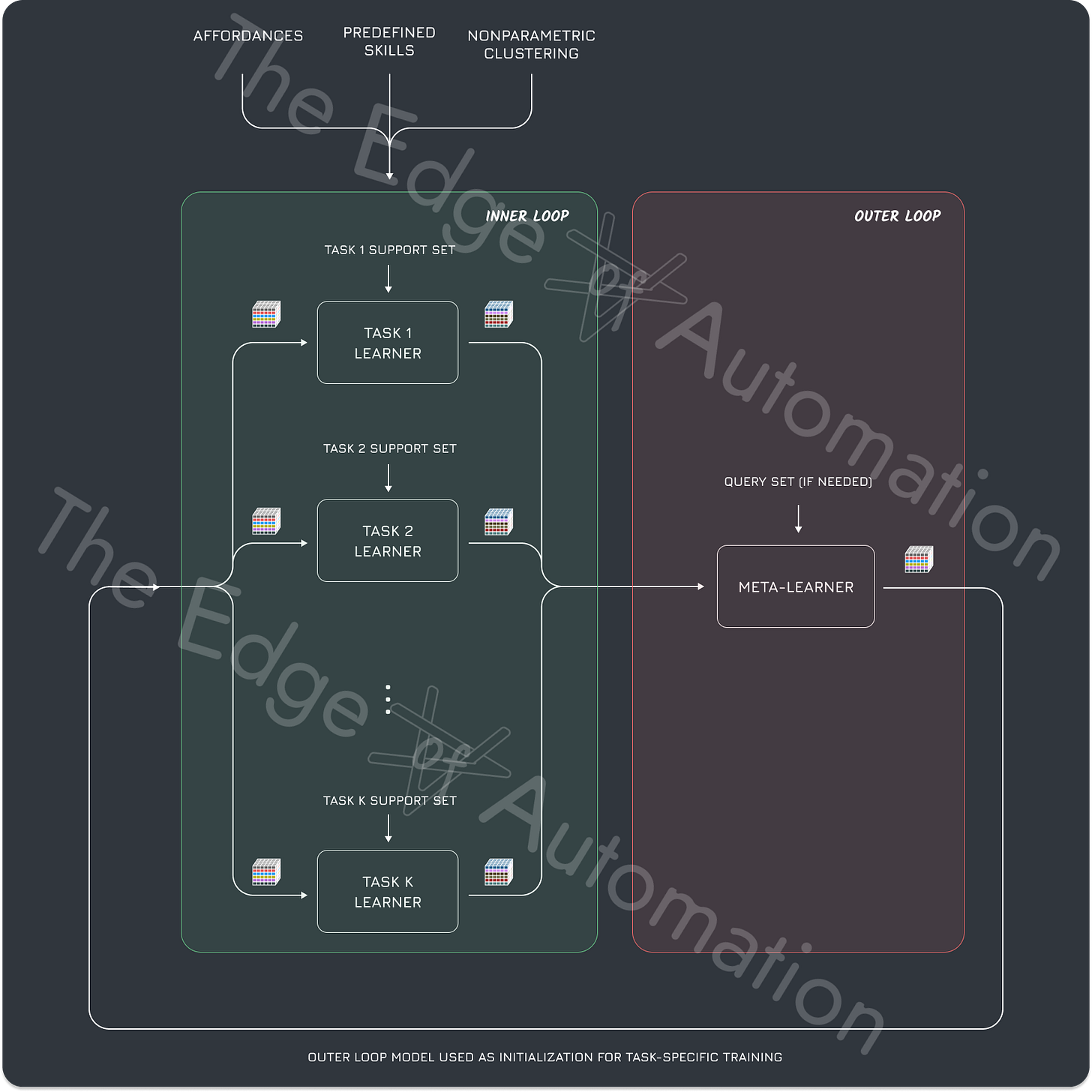

2.2.3. Chain of Affordances

The Chain-of-Affordances (CoA) framework transforms how robots interact with their environment by treating potential actions as an interconnected network. Each "affordance" – a possible interaction like opening a door or gripping a tool – becomes a building block that can be dynamically combined into complex behaviors, enabling robots to develop new capabilities through experience.

Video: Vision-Language-Action Model with a Chain of Affordances

This flexibility is essential for robots operating in unstructured environments. Rather than failing when encountering unfamiliar situations, CoA-equipped robots can adapt by combining known affordances in novel ways, similar to how humans instinctively adapt familiar skills to new challenges. This adaptability makes robots more effective in real-world applications where conditions frequently change and preset solutions may not suffice.
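One way to picture affordances as combinable building blocks is to give each one preconditions and effects, then search for a chain that reaches the goal. The sketch below uses breadth-first search over symbolic facts. The `Affordance` type, the fact names, and the search itself are illustrative assumptions and not the CoA framework's actual mechanism.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional


class Affordance(NamedTuple):
    name: str
    requires: FrozenSet[str]  # facts that must hold before acting
    adds: FrozenSet[str]      # facts that hold afterwards


def chain_affordances(state, goal, affordances) -> Optional[List[str]]:
    """Breadth-first search for the shortest affordance chain reaching goal."""
    start = frozenset(state)
    goal = frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        current, plan = queue.popleft()
        if goal <= current:
            return plan
        for aff in affordances:
            if aff.requires <= current:
                nxt = current | aff.adds
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, plan + [aff.name]))
    return None  # goal unreachable with the known affordances
```

Because the chain is assembled at run time, adding one new affordance to the list can unlock goals that no pre-scripted sequence covered.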

The most profound advance comes from systems that discover interaction possibilities through direct experience rather than human definition. While fixed industrial arms can rely on prescribed affordances, general-purpose mobile manipulators demand a more fluid understanding. Modern AI systems break this limitation through end-to-end affordance learning via reinforcement learning and self-supervised exploration. Combined with advanced simulation, this self-discovered knowledge enables intuitive environmental adaptation.

Quintet AI's affordance AI modules enable robots to autonomously chain complex sequences – from object manipulation to navigation to storage tasks – without requiring explicit programming for each step.

The CoA framework enhances human-robot collaboration by making robots more responsive to nuanced tasks. From high-precision surgery to dynamic manufacturing environments, CoA-driven systems are advancing automation toward greater contextual intelligence.

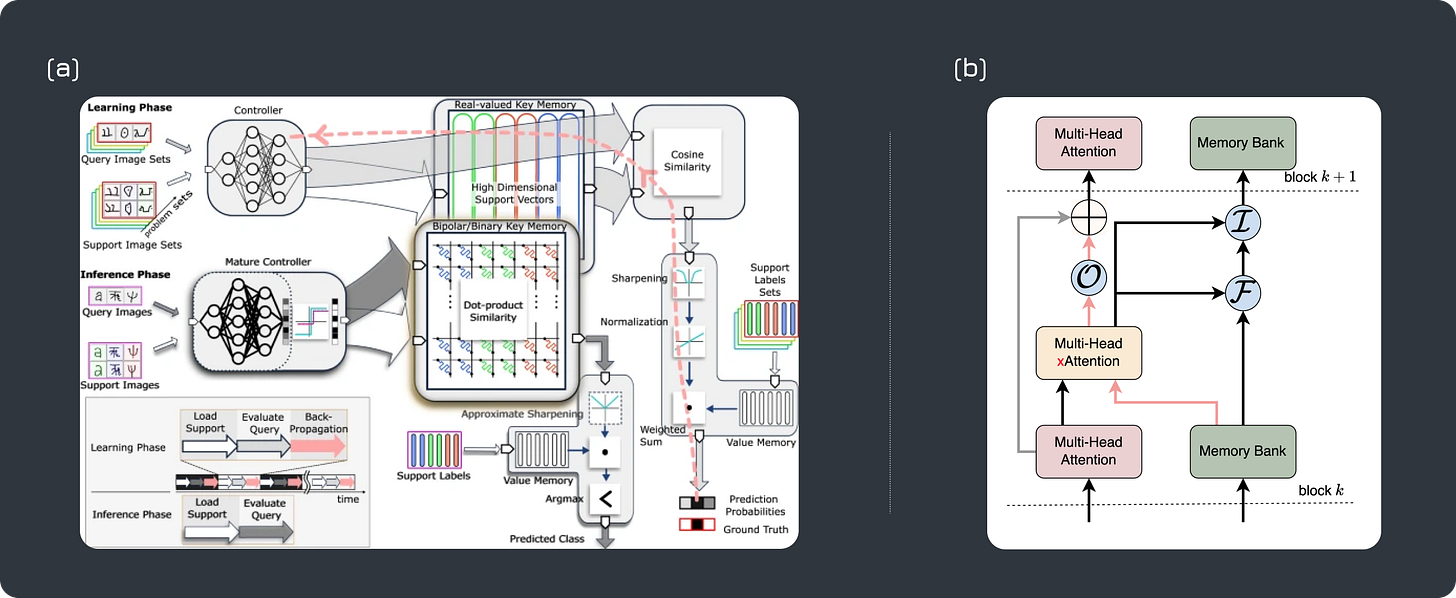

2.2.4. Memory for Long-Horizon Tasks

The final piece of advanced reasoning comes from architectural innovations in robot memory systems. While transformer models excel at processing immediate inputs, they struggle to maintain context across extended operations. New memory architectures solve this through specialized modules that track task progress, environmental changes, and learned experiences.

One example of a memory-based model is the Robust High-Dimensional Memory-Augmented Neural Network (HD-MANN), which improves learning efficiency by integrating external memory modules for high-dimensional embeddings, reducing data needs and training time. Similarly, the Large Memory Model (LM2) enhances Transformer performance in multi-step reasoning and long-context synthesis. With an auxiliary memory module and gated updates, LM2 effectively captures long-term dependencies in sequential data, improving adaptability and retention.

These systems demonstrate how memory-based models enable robots to handle complex sequences without losing track of overall goals or previous actions. A warehouse robot organizing inventory can seamlessly resume after interruptions, remember which areas have been processed, and maintain consistency across shifts – capabilities essential for true automation of human-level work.
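The idea of a gated memory update can be shown in a few lines. The sketch below is a heavily simplified scalar-gate version, not the LM2 architecture: a single gate value decides how much of a candidate vector overwrites the stored memory. In a trained model the gate logit would be produced by the network; here it is passed in by hand, and all names are hypothetical.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_update(memory: List[float], candidate: List[float],
                 gate_logit: float) -> List[float]:
    """Blend new information into memory.

    gate near 0 keeps the old memory, gate near 1 overwrites it:
    m_new = (1 - g) * m_old + g * candidate
    """
    g = sigmoid(gate_logit)
    return [(1.0 - g) * m + g * c for m, c in zip(memory, candidate)]
```

A strongly negative gate logit lets the memory survive many irrelevant observations almost unchanged, which is what allows context to persist across a long task.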

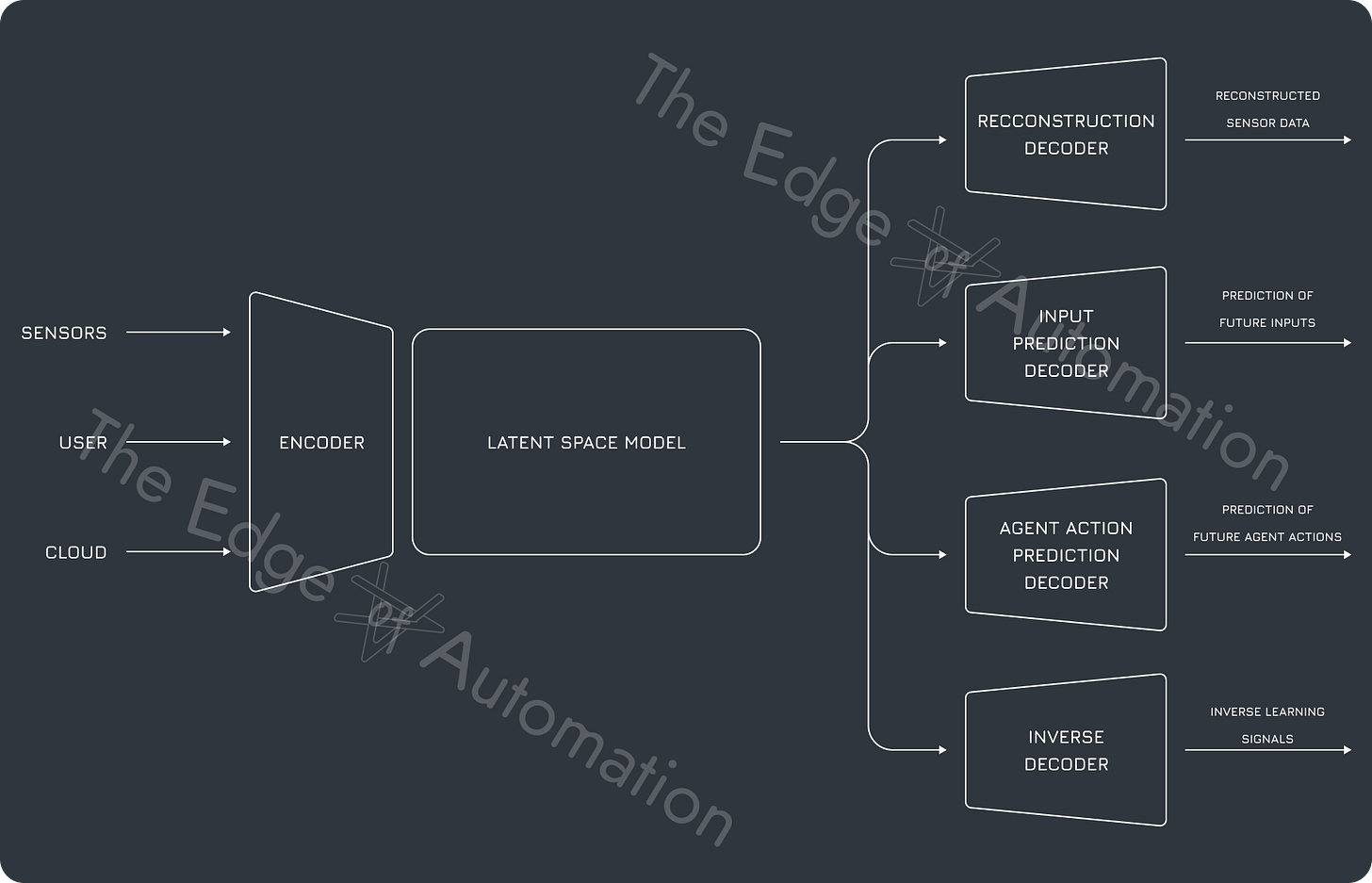

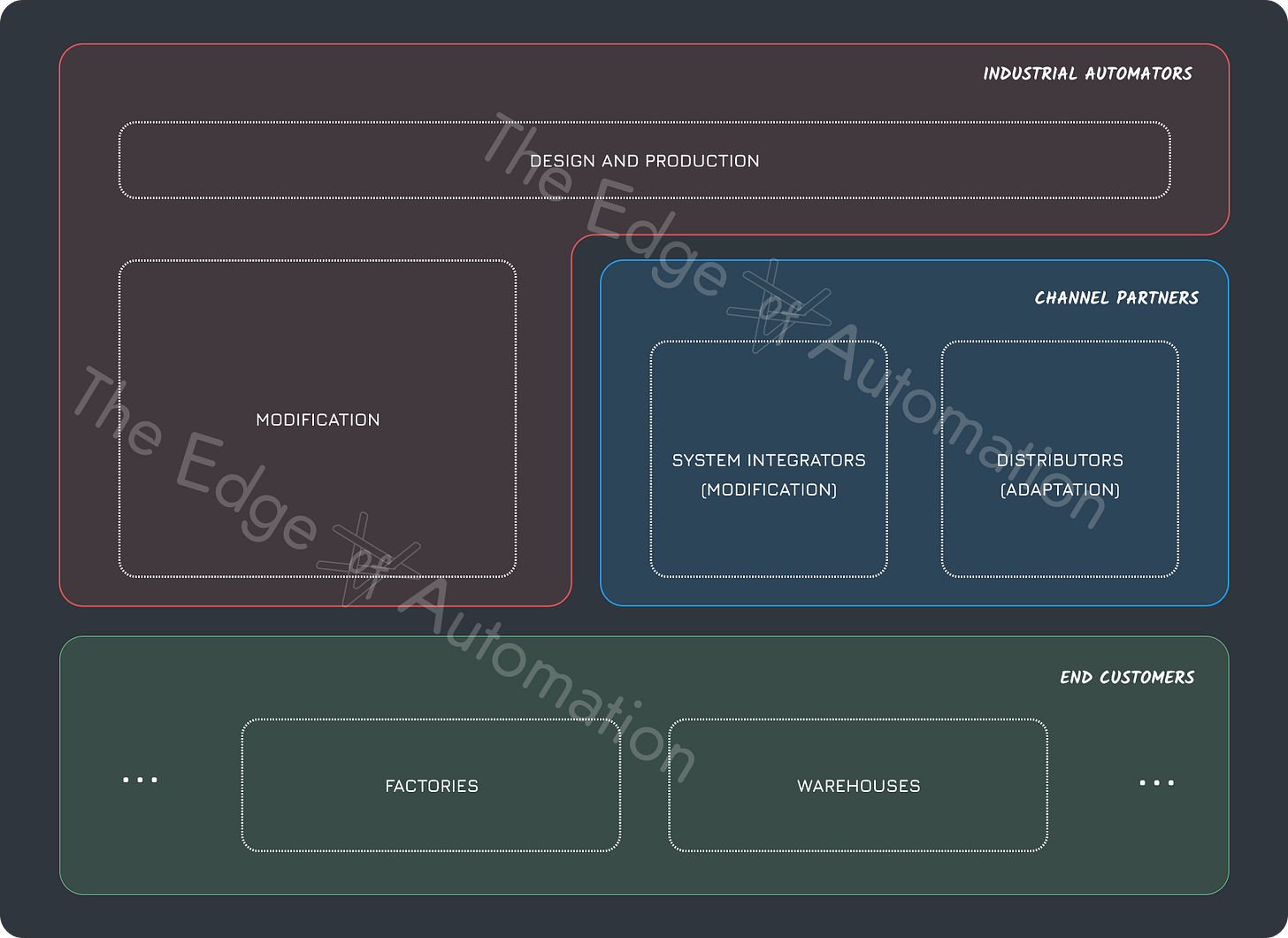

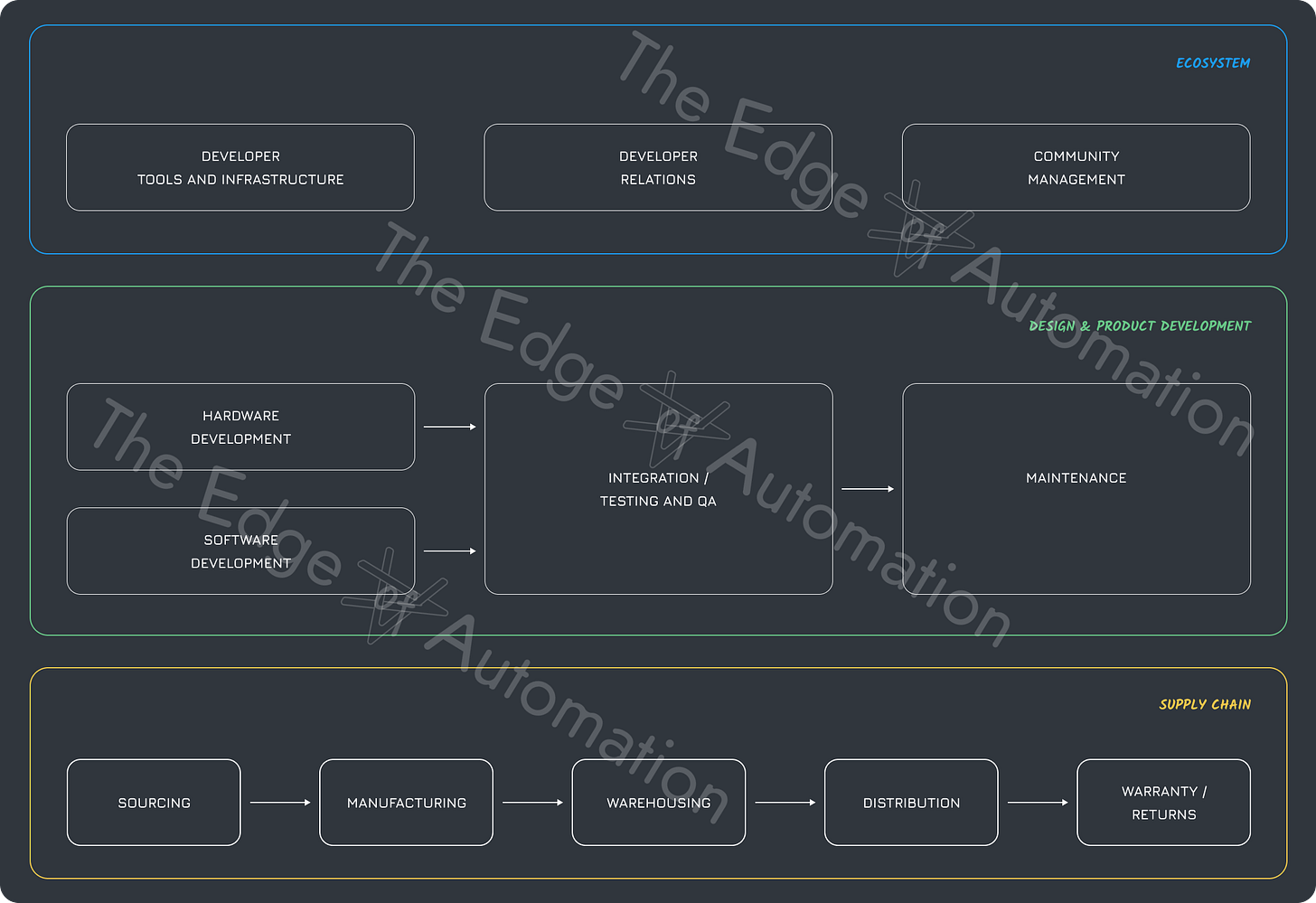

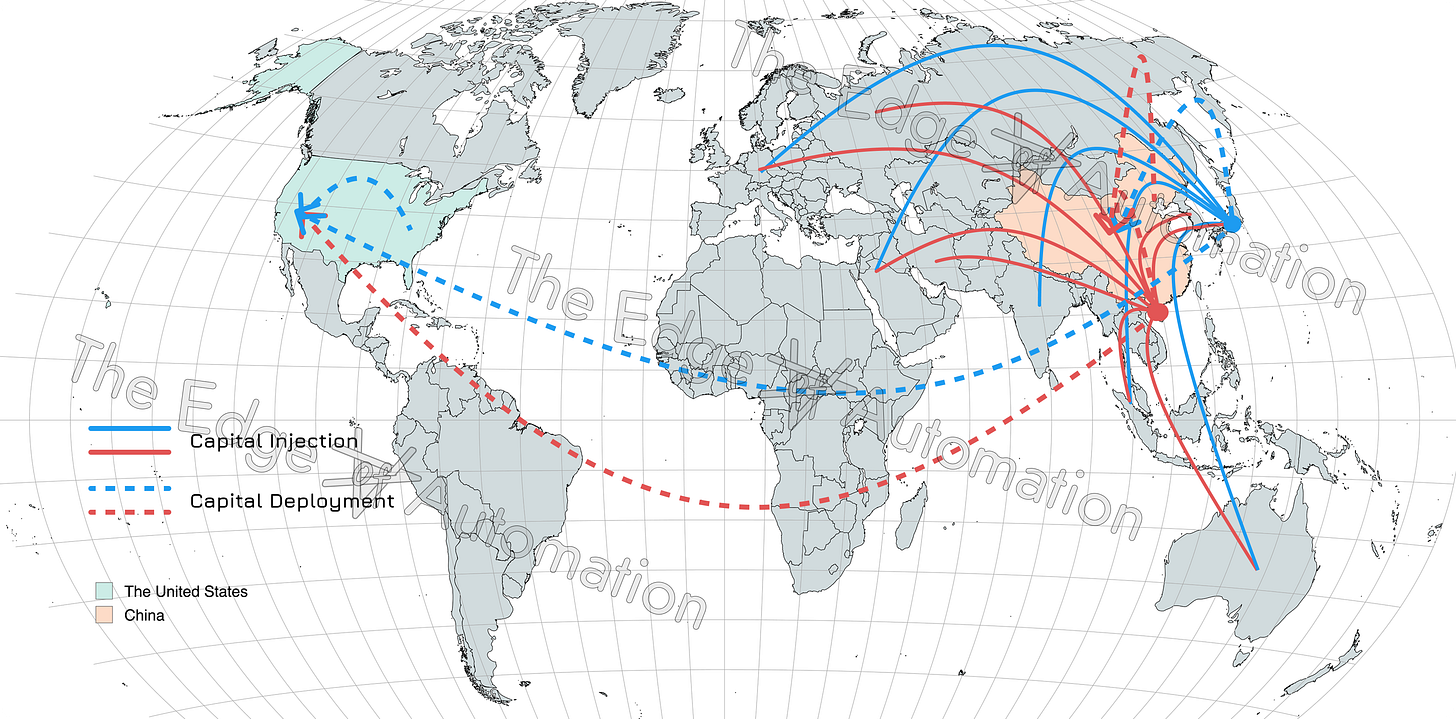

At Quintet AI, our memory-based models enable robots to handle extended sequences without losing track of subtle state changes or incomplete steps. Our latest innovation, a model that "learns to remember" during inference, has dramatically improved the reliability of humanoids and factory robots in multi-stage tasks like inventory management and assembly.